Artifacts Log 3: Synthetic math and Magpie datasets, another 1T param model, and many Mistral models

Artifacts ~124 and on for the year.

Reminder — no audio for these posts. Previous Issues can be found here.

This is the first data-heavy issue of Artifacts Log, which is what is needed for keeping the gap from closed to open models roughly stable. Especially in post-training, the lack of datasets (especially at scale), is by far and away the biggest factor holding open recipes back. For example, the most popular post-training dataset, UltraFeedback, has only about 50 thousand samples. Llama 3.1 is rumored to use million(s) of datapoints at least.

This series is pretty useful for me, and hopefully to you. The craft of releasing an open model is getting refined before us, and the artifacts out there are continuing to mature.

I’m going to start paywalling half of this series. They’re mostly additions to Interconnects, rather than the core product, and paywalling them will give me valuable data (and of course money) along the way to expanding and improving Interconnects.

In other news, CA SB 1047 is going into House committee this week. The press machines on both sides, for and against, will be going strong. Some readings you may consider on the matter.

My post, where I’m mostly concerned about the use of thresholds and how to handle downstream accountability for open weight models (my personal views).

My interview with Dean Ball (author of Hyperdimensional) on the bill and related topics.

Anthropic’s proposed amendments to the bill.

Elsewhere from me

A few quick updates in the post-training space before I move on to the primary part of the post (you’ll see one of the datasets below).

I gave a talk for my recent work on synthetic data, Self-Directed Synthetic Dialogues and Revisions, where I discussed a workflow for constructing multi-turn synthetic datasets with open language models (slides). I include a roundup at the end on a bunch of datasets covered in this post (and previous issues): Magpie, Nvidia’s Daring Anteater, Personas, and more.

I gave a short talk on our work from earlier this year on DPO vs. PPO (slides). The correct way to look at this is that data rather than method is the best way to improve post-training. If you do max out data, then switch to PPO.

We released some simple Gradio chat demo tools at Ai2. This includes tools for side-by-side demos, safety filter integration (like Llama Guard), saving data, etc. Lots of little things that would be too burdensome to add to vanilla Gradio.

OLMo-1B-0724-hf by allenai: We updated our OLMo 7B model with some small improvements to training data and annealing techniques. A pretty minor bump, but we have some exciting new models coming soon. An interesting thing I’ve learned about pretraining is how loss spikes often relate to “skipped tokens,” making the models worse at a fixed compute budget. This is because the gradients is loss spikes get clipped and the data effectively does nothing.

Datasets

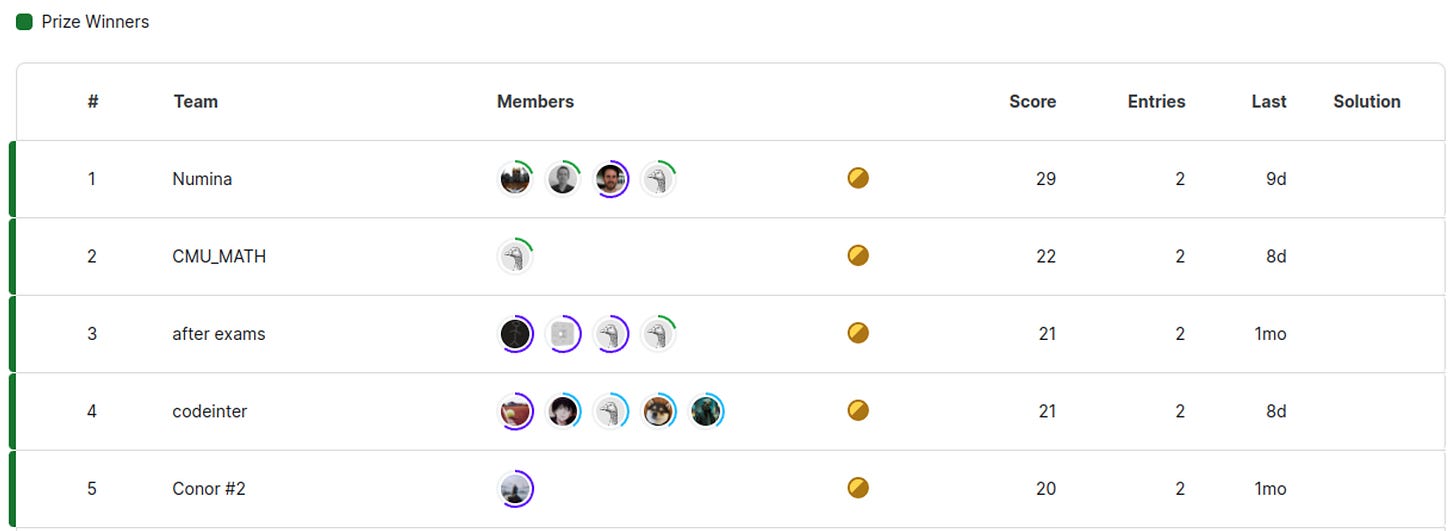

NuminaMath-TIR by AI-MO: This is a synthetic math dataset (GPT-4 completions) with tool-use to verify the outputs. This is exactly the type of data I think can cause huge gains in the open. It was used in a winning submission (by some of my former colleagues at HuggingFace) for a math competition. More items can be found in the collection.

Daring-Anteater by nvidia: This is a synthetic dataset used to train Nemotron 340B, containing conversations, structured JSON output, verifiable instructions, math, roleplay, and more. We found it to be very useful.

Magpie-Pro-MT-300K-v0.1 by Magpie-Align: A super interesting synthetic dataset where clever manipulation of special tokens has models create prompts themselves (and then completions). There’s also magpie-ultra-v0.1 by argilla, which is a version of this with Llama 405b and filtering. Read more about the Magpie method on Ahead of AI.

PersonaHub by proj-persona: This is a very large synthetic dataset of prompts with system prompts that is released as a part of a project that is hoping to create “1 billion personas.” This seems like a decently diverse prompt dataset, but if nothing else helps you track what major synthetic datasets look like.

hungarian_national_hs_finals_exam by keirp: An interesting evaluation dataset for math exams. When Grok-1 was released, this was their held-out set in face of GSM8k and MATH contamination. Mostly a funny find — seems like they’re transcribed by hand.

xlam-function-calling-60k by Salesforce: A high-quality (including human verification and filtering) function calling dataset. Early seeds of open-source language model agents.

Infinity-Instruct by BAAI: A new 1 million plus instruction set by compiling existing datasets, tagging them by category, filtering, and modification. I think there are a lot of projects in the open source like this, but it’s not easy to keep track of.

Models

Base

SmolLM-1.7B by HuggingFaceTB: HuggingFace released some decent small language models (135M, 350M, and this). Great to have more attention here. They also released a very fun demo that runs in your browser.

DCLM-7B by apple: Apple released a baseline for the open collaboration DataComp for Language Models (DCLM). This is a stronger model than OpenELM was, so let’s see where they take this.