Where 2024’s “open GPT4” can’t match OpenAI’s

And why the comparisons don't really matter. Repeated patterns in the race for reproducing ChatGPT, another year of evaluation crises, and people who will take awesome news too far.

With Google Gemini and Mixtral, we know we’re close to a model that, on paper, is similar to GPT4 on most standard large language model (LLM) evaluation tasks. I recently wrote about how Big Tech’s LLM evaluations are marketing at this point, and the same will hold for open model providers this year once they match the scores in the GPT4 report. The separation of on-paper evaluations from interactive evaluations has many pros and cons.

While it’ll be a great milestone for an openly available LLM to match GPT4’s evaluation scores, this is only the first step in the journey. It’ll also be another step towards open and closed models being developed in very different directions: open models are generally just weights, while closed models are extensive ML systems.

The next step is knowing how to fine-tune the model to be truly as useful as GPT4, which we’re a long way from. I’ve even heard that GPT4 before its alignment tuning isn’t very useful at all. While pretraining is still most of the work in getting models to the finish line, reinforcement learning from human feedback (RLHF) has long been a necessary step too.

In short, the open capabilities of RLHF and all methods of preference fine-tuning lag far behind their closed counterparts. Direct Preference Optimization (DPO), the algorithm providing boosts to usability, AlpacaEval, and MT Bench scores for open chat models, isn’t a solution; it’s a starting point (as I’ve written about extensively). We have a lot of work to do to truly have local chat agents at GPT4 quality. Primarily, I would recommend digging into data and continuing to expand evaluation tools centered around these methods. We’re only pushing the flywheel through its first few rotations, and efforts on data and evaluation tend to compound the most, far more than new methods checked with vibes-based evaluation. The data problem is epitomized by the fact that most fine-tuning data is derivative of GPT4 or a related model like Claude. OpenAI’s John Schulman already gave a very good talk on why training on distilled data can limit the performance of a model.
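For readers who haven’t seen DPO written out, its per-pair loss is compact. The sketch below is a minimal plain-Python version, assuming you already have the summed log-probabilities of the chosen and rejected responses under both the policy being trained and a frozen reference model; `beta=0.1` is an illustrative default, not a recommendation, and real training computes this over batches with autograd.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative value, not a tuned recommendation
) -> float:
    """DPO loss for one preference pair, from summed sequence log-probs."""
    # Implicit reward of each response: beta * (log pi_theta - log pi_ref).
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    # Push the chosen reward above the rejected one (Bradley-Terry likelihood).
    return -log_sigmoid(chosen_reward - rejected_reward)


# Policy identical to the reference: the margin is 0, so the loss is log(2).
print(round(dpo_loss(-10.0, -12.0, -10.0, -12.0), 4))  # 0.6931
# Policy has raised the chosen response's likelihood: the loss drops.
print(round(dpo_loss(-9.0, -12.0, -10.0, -12.0), 4))  # 0.6444
```

Nothing in the loss says where the preference pairs come from, which is why the data and evaluation work matters more than the algorithm itself.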

All of this analysis is grounded in the original version of GPT4 and doesn’t account for the fact that GPT4-Turbo is a step ahead in many benchmarks (most importantly Chatbot Arena).

Matching GPT4’s benchmarks within a year of its release, which would mean by March or April, would be a huge achievement. I just don’t want people to overstate the claims. At the same time, we shouldn’t be too worried about vibes-based evaluations for RLHF, which are still needed in the top labs to figure out if a certain model passes the sniff test. We should just be worried about not taking our blind spots into account.

Models vs. products

There’s another level to this when you start comparing something like ChatGPT4 (which is an odd naming scheme) to a weights-available model with performance similar to GPT4. ChatGPT is a product, and the comparisons we’ll be making are to a model. Products make it easier to use models. I’m not an expert on LLM serving, prompting, and control, but I’m fairly sure there’s more than meets the eye behind the ChatGPT box (in addition to just safety). This apples-to-oranges vibe is why it’s so frustrating to see so many people touting ChatGPT as dominant over model releases from academia and beyond: they’re just different things. We should treat them as such.

The clearest example is in safety. It’s a reasonable idea to release a model that refuses the most offensive requests, but building that behavior in makes the model a little bit harder to train. Systems like ChatGPT handle many of these requests with a separate machine learning system applied to the outputs: it watches the stream of generated tokens and decides whether the LLM’s outputs are suitable. The separation of tasks in modular systems and products will only get more nuanced from here for the likes of OpenAI and Google, so let’s not flatter them by letting their closed LLM systems take up too much focus in the development of open alternatives.
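To make the modular-system point concrete, here is a minimal sketch of an output-side filter wrapped around a token stream. It is not how OpenAI does it (that isn’t public); `moderated_stream` and `toy_classifier` are hypothetical names, and the keyword check stands in for a real learned moderation model.

```python
from typing import Callable, Iterable, Iterator


def moderated_stream(
    tokens: Iterable[str],
    is_unsafe: Callable[[str], bool],
    check_every: int = 4,
    refusal: str = "[response withheld]",
) -> Iterator[str]:
    """Release generated tokens in chunks, checking the text so far before each release."""
    seen = ""      # full text generated so far
    pending = []   # tokens held back until the next check
    for tok in tokens:
        pending.append(tok)
        seen += tok
        if len(pending) >= check_every:
            if is_unsafe(seen):
                yield refusal  # stop the stream instead of releasing the chunk
                return
            yield from pending
            pending = []
    # Check whatever is left when generation ends.
    if pending:
        if is_unsafe(seen):
            yield refusal
            return
        yield from pending


def toy_classifier(text: str) -> bool:
    # Hypothetical stand-in for a learned moderation model.
    return "forbidden" in text.lower()


print(list(moderated_stream(["Hello", " ", "world", "!"], toy_classifier)))
# ['Hello', ' ', 'world', '!']
print(list(moderated_stream(["ok", " fine", " forbidden"], toy_classifier, check_every=2)))
# ['ok', ' fine', '[response withheld]']
```

The second call shows the design trade-off: tokens released before the flag are already with the user, so a smaller `check_every` leaks less but calls the classifier more often. None of this logic lives in the model weights, which is exactly what an open-weights release doesn’t ship.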

Closed companies are literally incentivized to train on high-quality test sets if they have their own internal evaluations. Open weights make it much harder to get away with this, and contaminating an open model is normally not worth it anyway (people figure it out).

The final twist in this tale is compute, which (Chat)GPT4 uses a ton of. While open models are getting bigger rapidly, the real dynamic to watch is progress on local models: the compute accessible to open ML players grows in proportion to the GPUs in local machines. I’ll cover this more in the future, when I revisit moats.

RLHF progress: Revisiting Llama 2’s release and potential in 2024

I was browsing r/LocalLLaMA before Christmas (as instructed by karpathy and others), and it got me in the mood for my favorite activity: criticizing those whose values I sympathize with so they have a better chance to fix their mistakes.

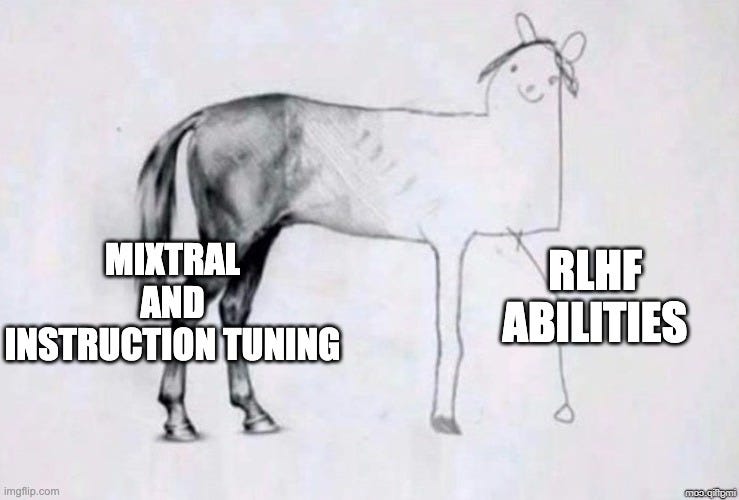

Whether or not we like it, the state of open abilities in RLHF fits the horse drawing meme format perfectly:

The gap shows in the fact that I’m sort of the face of open RLHF, rather than someone who has been doing this for five years; all of those people are under lock and key at OpenAI, Anthropic, DeepMind, etc., or building startups to try and capitalize on how valuable this area is.

We’re making progress on ideas that the narrower-minded scaling recipe wouldn’t try, like DPO. Meta’s Llama 2 was the best chance we had at closing the gap, but neither Meta’s RLHF code nor its data was released. With that data and code, we would’ve had a much easier time answering questions like: do we need RL for RLHF, and does off-policy data work in RLHF? At a practical level, because they weren’t released, we’re only now gathering the groups needed to get initial answers. One large hyperparameter sweep of DPO training on the Llama 2 fine-tuning dataset would reduce the error bars substantially. I’d be super excited to see DPO included in the Llama 3 release, but we need access to code and data to answer the questions!
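To give a sense of what “one large hyperparameter sweep” means in practice, here is a sketch that expands a hypothetical DPO grid into per-run configs. The grid values are illustrative assumptions, not settings from the Llama 2 paper or any released recipe; the point is how quickly the run count multiplies once you add seeds for error bars.

```python
from itertools import product

# Hypothetical grid: these values are illustrative, not from the Llama 2 paper.
BETAS = [0.01, 0.05, 0.1, 0.5]
LEARNING_RATES = [5e-7, 1e-6, 5e-6]
EPOCHS = [1, 3]
SEEDS = [0, 1, 2]  # repeated seeds are what turn single numbers into error bars


def build_sweep() -> list[dict]:
    """Expand the grid into one config dict per training run."""
    return [
        {
            "name": f"dpo_beta{beta}_lr{lr}_ep{epochs}_seed{seed}",
            "beta": beta,
            "learning_rate": lr,
            "epochs": epochs,
            "seed": seed,
        }
        for beta, lr, epochs, seed in product(BETAS, LEARNING_RATES, EPOCHS, SEEDS)
    ]


sweep = build_sweep()
print(len(sweep))  # 72 runs = 4 betas x 3 learning rates x 2 epoch counts x 3 seeds
```

At 70B scale each of those runs is expensive, which is why a single well-resourced release of code and data would be worth so much to everyone else.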

One of the quirks of Llama 2 chat is that Meta presented way more information on RLHF than on anything else in the model, under the guise of safety. The “publish more about alignment” track that Anthropic pioneered and continues is pretty odd for a company that prides itself on openness as a business strategy. Regardless, this passage in the paper was striking:

Meanwhile reinforcement learning, known for its instability, seemed a somewhat shadowy field for those in the NLP research community. However, reinforcement learning proved highly effective, particularly given its cost and time effectiveness.

Llama-2-chat was never loved by the community. I wonder if this was backlash to its safety behavior or something they got wrong in training. My understanding is that the overly-safe behavior was mostly mitigated by updating the system prompt and that it wasn’t a lasting problem. That isn’t reflected in its usage, though: in my experience, it is never mentioned anymore. People have a short attention span for models that don’t pass the sniff test.

Llama 3 chat will be extremely telling as to whether Meta shares as much, more, or less about its RLHF process. Let’s keep our fingers crossed. I hope it’s more than a high-level signal for us to point at and read between the lines of. Releasing all the artifacts would transform the landscape of open RLHF, which goes to show how precarious the situation is.

In 2024 I expect the instruction variants that Mistral releases to get better now that they’re in the API business. Hopefully, this doesn’t make them mirror Meta’s approach to openness, where they release nothing about RLHF because it’s part of their business model. What was a precarious balance in 2023 could easily become an area of strength for open ML. Make sure your friends at Mistral and Meta are on board with releasing more on RLHF.

At its best, open values succeed by having a plurality of options in many models and datasets. This is the ideal we should be approaching.

Smaller scale open RLHF

On the other side of RLHF models, i.e. those without hundreds of millions of dollars in VC or advertising money, we have the Zephyrs and Tulus of the world, which were all built on variants of the synthetic UltraFeedback dataset. In short, this dataset aggregates instructions from many popular synthetic datasets, generates completions from a range of models, and has GPT4 rate them. I don’t know exactly why it does so much better than earlier preference datasets, but if I had to guess, it would be mostly because the quality of synthetic instruction data kept improving throughout the year. That, combined with GPT4 being a huge step above GPT3.5 in its ability to act as a judge (GPT3.5 couldn’t even reliably do basic formatting like outputting an integer), finally made its way through the pipeline.
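As a rough illustration of how a GPT4-rated dataset becomes pairwise preference data, here is a sketch that parses a judge’s free-text rating and keeps the highest- and lowest-rated completions as a chosen/rejected pair. This is an assumption about the general recipe, not the exact UltraFeedback or Zephyr processing (published pipelines differ in how they pick the rejected response); the 1 to 10 scale and the function names are hypothetical.

```python
import re
from typing import Optional


def parse_score(judge_output: str, low: int = 1, high: int = 10) -> Optional[int]:
    """Pull the first integer out of a judge's free-text reply; None if missing or out of range."""
    match = re.search(r"\d+", judge_output)
    if match is None:
        return None  # judge ignored the format instructions
    score = int(match.group())
    return score if low <= score <= high else None


def binarize(prompt: str, completions: list[str], judge_outputs: list[str]) -> Optional[dict]:
    """Turn several rated completions into one chosen/rejected pair."""
    scored = []
    for text, raw in zip(completions, judge_outputs):
        score = parse_score(raw)
        if score is not None:
            scored.append((score, text))
    if len(scored) < 2:
        return None  # not enough usable ratings
    scored.sort(key=lambda pair: pair[0], reverse=True)
    (best, chosen), (worst, rejected) = scored[0], scored[-1]
    if best == worst:
        return None  # a tie carries no preference signal
    return {"prompt": prompt, "chosen": chosen, "rejected": rejected}


pair = binarize(
    "Explain DPO in one sentence.",
    ["answer A", "answer B", "answer C", "answer D"],
    ["Rating: 8/10", "I liked it a lot!", "Score: 3", "6"],
)
print(pair)
# {'prompt': 'Explain DPO in one sentence.', 'chosen': 'answer A', 'rejected': 'answer C'}
```

The regex is deliberately naive (a reply like “out of 10, I’d give it 8” parses as 10), which is exactly the kind of fragility a weaker judge exposes: every unparseable or mis-parsed rating silently drops or corrupts a training pair.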

The likes of HuggingFace, Allen AI, Stanford, etc. are leading on this kind of training. This group is very different from, and much narrower than, the instruction fine-tuning (IFT) scene, where many prominent individuals and research labs sprouted up (such as Nous Research, Alignment Lab, Disco, The Bloke, and many more I missed). It seems to take slightly bigger teams and a bit more compute to find the settings that work.

As I was saying, Mistral’s instruct models were quickly passed by all these smaller players in 2023. It seems like they’re focusing on pretraining for now, which is smart and more labor-intensive.

I still stand by what Raj and I have been saying on Twitter for a while: there’s a difference between doing RLHF and doing RLHF well. The latter still takes teams of about five or more people, with a strong engineering focus. We’re getting closer to people doing that, but I don’t expect throwing DPO at more datasets in an ad-hoc manner to solve it in 2024. By the end of 2024, the required team size will probably drop to three, too. I love our rapid progress!

Opportunities

I’m most excited about using bigger models in RLHF and getting better pipelines flowing (potentially as community efforts). The former is mostly because bigger models tend to be more nuanced and responsive in conversation. Bigger models tend to have a larger variety of responses and to be a bit easier to work with. This type of behavior is why I still use ChatGPT4 regularly when coding.

The latter is the one more people have access to: data pipelines. I’ve been following one company, Argilla, for a long time, and they seem to be heading in the right direction: building easy-to-use and cheap tools for data collection and filtering. They’ve been demonstrating how to use them by making small improvements to popular models like Zephyr. As this direction starts to pay off for them, others in the community will catch on, and I could see some benefits compound in wild ways by the end of 2024. Hopefully 2024 is the last year where we have to be in the dark when it comes to preference data.

Of course, there are places like Scale AI that have 6- to 8-figure contracts with the biggest labs, but until they’re more open about their insights and methods, I don’t expect much. Scale, we’d love it if you proved me wrong! From an incentives perspective, it’s always hard to move labor off highly lucrative projects to build something that could compete with your bottom line in 2-4 years. Commoditized preference data is exactly that kind of competitor, but the community and Argilla have a long way to go before we know how the most advanced tricks of RLHF data work, such as iterative data collection and model version updating (as discussed in John Schulman’s Proxy Objectives talk).
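Iterative data collection, as I understand the idea, roughly means labeling comparisons sampled from the latest model version and retraining on the growing dataset, so the preference data keeps tracking the model’s current behavior. Below is a minimal sketch of that loop under heavy assumptions: `sample`, `label`, and `train` are hypothetical placeholders (toy versions are included so it runs), and a real pipeline would put human or AI labelers at the `label` step and usually a reward model in between.

```python
from itertools import count
from typing import Callable, List, Tuple

Pair = Tuple[str, str, str]  # (prompt, chosen, rejected)


def iterative_preference_rounds(
    model,
    prompts: List[str],
    sample: Callable,
    label: Callable,
    train: Callable,
    rounds: int = 3,
):
    """Collect preferences on the current model's outputs, retrain, repeat."""
    dataset: List[Pair] = []
    for _ in range(rounds):
        # 1. Sample fresh completions from the *current* model version.
        for prompt in prompts:
            a, b = sample(model, prompt), sample(model, prompt)
            chosen, rejected = label(prompt, a, b)
            dataset.append((prompt, chosen, rejected))
        # 2. Update the model on everything collected so far.
        model = train(model, dataset)
    return model, dataset


# Hypothetical toy stand-ins so the loop runs end to end.
_ids = count()


def toy_sample(model: int, prompt: str) -> str:
    return f"v{model}:{prompt}:{next(_ids)}"  # tag each output with the model version


def toy_label(prompt: str, a: str, b: str) -> Tuple[str, str]:
    return a, b  # a real labeler (human or AI judge) would compare a and b


def toy_train(model: int, data: List[Pair]) -> int:
    return model + 1  # "training" just bumps the version number


final_model, data = iterative_preference_rounds(0, ["p1", "p2"], toy_sample, toy_label, toy_train)
print(final_model, len(data))  # 3 6
print(data[2][1])  # v1:p1:4  (the second round labels outputs from model version 1)
```

The version tags make the key property visible: each round’s comparisons come from the model that was just trained, rather than from a fixed, distilled dataset.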

Commentary

People are excited about open ML right now, I get it, but even friends of the pod will be corrected if they’re leading people astray. This was the latest tweet to really annoy me:
