Scaling realities

Both stories are true. Scaling still works. OpenAI et al. have still oversold their promises.

Notes: First, this is a cleaned-up version of a long post I shared on social media earlier this week. Second, a few final edits for clarity are not reflected in the voiceover.

There is a lot of discussion about whether "scaling is done," with stories from The Information saying that the latest GPT models aren't showing the gains OpenAI wanted while Sam Altman still parades around saying AGI is near (and a similar, more detailed story from Bloomberg on Gemini). We're still waiting for Gemini 1.5 Ultra and Claude 3.5 Opus, let alone the next version numbers.

Both narratives can be true:

- Scaling is still working at a technical level.
- The rate of improvement visible to users is slowing, especially for the average user.
- Without performance gains that users can clearly see, launching the next scale of models is a strategic gamble.

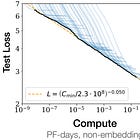

Ultimately, a scaling law is a technical relationship between test loss and training compute. That test loss need not be correlated with downstream performance. This was the central motivation of my recent post on scaling's impacts, though the point got caught up in a different focus on the AGI strawman.

This post is just about one specific reality: Scaling works, and that doesn't solve all of AI's economic problems.

Inside the labs, I've heard nothing suggesting that scaling laws are stopping. If anything, the curves are starting to bend slightly away from a linear-looking power law, but by spending more, we're straightening them out.
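As a rough illustration of what "linear-looking" means here: a scaling law is commonly written as a power law, L(C) = a·C^(-b), which appears as a straight line with slope -b when log loss is plotted against log compute. This is a hypothetical sketch, not any lab's actual fit: the constants a = 10 and b = 0.05 and the compute budgets are invented for illustration, and the fit is plain least squares on the logged values.

```python
import math


def power_law_loss(compute: float, a: float = 10.0, b: float = 0.05) -> float:
    """Hypothetical scaling law L(C) = a * C**(-b).

    The constants a and b are illustrative assumptions, not fitted to any real model.
    """
    return a * compute ** (-b)


def fit_log_log(computes, losses):
    """Ordinary least squares on (log C, log L); returns (slope, intercept)."""
    xs = [math.log(c) for c in computes]
    ys = [math.log(l) for l in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training-compute budgets (FLOPs), one per order of magnitude.
computes = [10.0 ** k for k in range(18, 25)]
losses = [power_law_loss(c) for c in computes]

slope, intercept = fit_log_log(computes, losses)
print(round(slope, 4))                # recovered exponent, equal to -b
print(round(math.exp(intercept), 4))  # recovered prefactor, equal to a
```

Because these synthetic points lie exactly on a power law, the fit recovers slope -b and prefactor a, and each 10x increase in compute multiplies loss by the same factor, 10^(-0.05). Real measurements are noisy; a curve "bending away" would show up as systematic residuals from the fitted line at the largest budgets.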

The biggest blockers to training these models to test the scaling laws are physical constraints: GPU allocation, power supply, permitting, etc. Some blockers are modeling questions, like how to batch together training updates from multiple data centers or how to get more usable synthetic training data, but most of them are not related to the definition of scaling laws.

Even with scaling laws "working," the perception of the final post-trained GPT-5, Claude 4, and Gemini 2 class models can be underwhelming. This is because our goalposts are based on certain types of chat tasks.

The best language models now trigger a kind of psychological conditioning that has always applied to humans. Because a language model, or a human, sounds smart, we assume it can accomplish a diverse spectrum of tasks. Language models are now gaining robustness in capabilities we had largely already attributed to them. We're backfilling and pushing forward at the same time.

For general chat tasks, the models went from unusable to compelling but buggy with GPT-3.5, the original ChatGPT. With GPT-4, normal chat tasks, coding, and math became viable. What is GPT-5 expected to unlock? It's not clear to me what a better chat model would look like. Robustness is hard to get excited about.

OpenAI has backed itself into a corner with its messaging. Its claims that AGI is coming soon made people expect AGI to arrive in a form factor they already know. Really, the AGI they're building is not contingent on GPT-5 being mind-blowing. It's a system that will use GPT-5 as one part of it. It'll be agents, like computer use, or even entirely new form factors, such as agents that are dispatched on the web. These views of "AGI" are entirely separate from what ChatGPT is.

All of this is to say that I find both arguments to be true. Scaling is working, as the AI true believers keep saying regardless of the media environment. Still, current ChatGPT-style products are hitting limits that a bigger model may not solve. We need more specialized models. OpenAI's o1 is one of the first forays into "something different," and no one is suggesting that approach will cap out this year or next.

Even so, I still strongly believe there are many more products to be built with current AI that will unlock immense value. None of these trends doom the industry at large, but investment will slow if OpenAI et al. need to change their fundraising strategy.

We still have a huge capability overhang. Our ability to create specific capabilities in models is still growing very fast (e.g., through the expansion of post-training). I see a ton of low-hanging fruit and no reason to be pessimistic about the trajectory of AI improvement. It is just important to ground where that improvement is and what it is. This capability-product overhang takes two forms:

1. Powerful AI models can do a ton of things today that either a) we don't know about or b) we don't know other people will value.
2. Scaling will keep making the models "better" in a technical sense, and we have no way to know what this may unlock.

All of us should be excited that companies are willing to risk their economic viability to explore this frontier. The benefits they find will last forever, whether or not the first batch of companies does.

Thanks to Alberto Romero at The Algorithmic Bridge and many friends in the Interconnects paid Discord server for discussions that cultivated this post. Lex Fridman's recent interview with Dario Amodei has more useful commentary on the topic. If you like this type of shorter, to-the-point post, let me know!