The latest open artifacts (#7): Alpaca era of reasoning models, China's continued dominance, and tons of multimodal advancements

Artifacts Log 7. It'll continue to be a fun spring for AI researchers and practitioners.

It’s one of those months where it feels like a year passes in the open-source ecosystem. Things are the liveliest they’ve been in quite some time, obviously thanks to DeepSeek R1. We’re here to map that out for you.

The biggest things to know are:

Tons of DeepSeek R1 datasets are appearing, knowing which one is best is nuanced.

China continues to release most of the strongest models across the AI stack with more permissive licenses.

Aside from the many models covered in this and the previous episode, Baidu has announced their plan to make their upcoming Ernie 4.5 model open source on June 30th, while making their premium tier free in April.

A lot of Chinese labs have started their presence on X (formerly Twitter), including Qwen, Hailuo, StepFun, and Hunyuan, among many others and made their models easily accessible with either HuggingFace Spaces or dedicated sites, like QwenLM Chat.

As usual, the artifacts in this post are available under this HuggingFace collection.

Our Picks

Mistral-Small-24B-Instruct-2501 by mistralai: Mistral released a new, open model. Aside from the size inflation, they announced in their blog post that they will move away from the Mistral Research License to Apache 2.0, the license they used for their first models, like Mistral 8B and Mixtral. This is big news for opening up more downstream use!

Qwen2.5-VL-7B-Instruct by Qwen: Qwen updated their vision models. Like the previous generation, they are among the strongest options out there and can rival their closed competitors. Besides the usual capabilities (like OCR, bounding boxes, and video understanding), this model iteration is capable of parsing documents into HTML. However, the licensing is all over the place: The 3B version uses the non-commercial Qwen research license, while the 7B model has the Apache 2.0 license; the 72B version has the Qwen license.

granite-3.2-8b-instruct-preview by ibm-granite: IBM is constantly churning out new (mostly small-ish) models, but is still a relatively unknown player among the model makers. This model is their first shot at a reasoning model and builds upon Granite 3.1 8B, which we covered in the previous episode. While a lot of reasoning models released recently rely on distillation from R1, IBM uses "own reinforcement learning-based techniques for triggering chain-of-thought reasoning across any domain", which does not need a teacher model as outlined in their blog. Furthermore, the reasoning mode of the model can be toggled on or off by setting a specific system message. It is obvious that the distinction between "normal" LLMs and "reasoning" LLMs will become non-existent in the future as RL becomes an increasingly big part of the training. Future models will learn when and how much inference should be spent before the final answer.

Llama-3.1-Tulu-3.1-8B by allenai: Ai2 released an updated version of Tülu. Trained on the same data as the previous version, but with GRPO (instead of PPO), the same algorithm used by R1. This results in better performance across the board, most notably in math benchmarks. It also is evidence against the argument that GRPO is "poor man’s PPO". Full reasoning models from Ai2 are still “coming soon.”

Links

Large-scale training resources:

Various Google DeepMind authors have released an in-depth guide on how to scale up Large Language Models. While the guide is mainly focused on Jax and TPUs, the learnings can be applied to other frameworks and hardware as well. Whispers are that this was a form of internal Gemini docs made ready for public viewing.

HuggingFace put out an Ultra-Scale Playbook which covers many of the same details. These are fantastic resources for learning pretraining tradeoffs and decision-making.

Jasmine Sun put together a good recap of “The DeepSeek Moment” content on Substack — mostly at this point, this is a good resource for finding more high-quality AI writing.

Two titans of post-training John Schulman and Barret Zoph (both formerly at OpenAI, now at Thinking Machines) gave a lecture on post-training. The slides are a good overview, and we hope the recording comes soon.

For other nerds of how internet culture and personal marketing work, this was the most coherent theory for how the modern internet shapes it — Creator Gravity.

If you’re into the weird side of language models, you should be following Sander’s explorations into the Claude tokenizer. It is not a standard BPE.

The Beijing Academy of Artificial Intelligence, which is mostly known for the BGE embedding models, has started an initiative to reproduce R1 openly in the spirit of projects like BigScience (known for BLOOM). The GitHub repository outlines their ambitious plans, including distributed pre-training of a DeepSeek MoE model supporting different AI chip architectures and the creation of open reasoning datasets. This fits with the obvious theme of China consistently leading on open-source AI right now, but we need to see what they ship.

Reasoning

Models

huginn-0125 by tomg-group-umd: A reasoning model trained to reason in latent space, opposed to the current breed of models which are trained to reason in tokens. The model uses recurrent blocks, which are running multiple times during inference to scale up the inference compute.

DeepScaleR-1.5B-Preview by agentica-org: A RL-tuned version of R1-Qwen2.5 1.5B, which improves the math performance compared to the original, distilled model significantly. One of their main findings is that the incorrect responses were three times longer than the correct ones.

DeepHermes-3-Llama-3-8B-Preview by NousResearch: Nous has released a preview of their reasoning model, which is distilled from R1. Similar to the IBM model, the reasoning mode is toggleable by changing the system prompt.

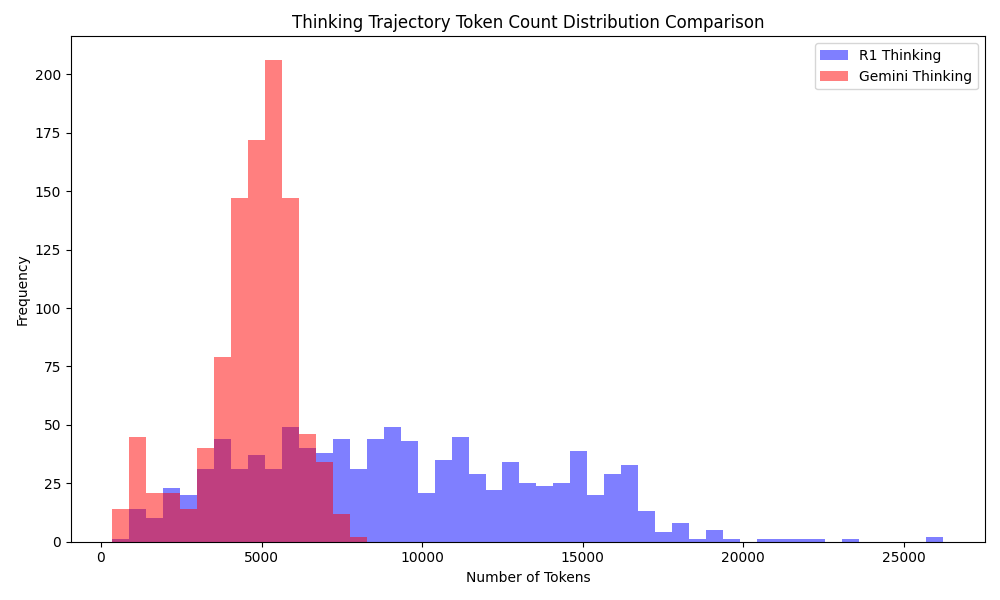

s1.1-32B by simplescaling: A fine-tuned version of Qwen2.5 32B trained on just 1,000 examples. This version uses R1-generated traces, while the first version uses traces generated by Gemini Flash Thinking. However, the authors found that the R1 traces are longer and more diverse than the Gemini traces.

Mistral-Small-24B-Instruct-2501-reasoning by yentinglin: A fine-tuned version of the new Mistral model on R1 traces. It surpasses other larger models on math benchmarks.

r1-1776 by perplexity-ai: A fine-tuned version of R1 to remove the Chinese censorship on China-critical topics. The resulting models’ performance is similar to the base model.

OREAL-7B by internlm (also 32B variant): A bit of a different take on the current rage of reasoning models. Quoting the model card: “Our method leverages best-of-N (BoN) sampling for behavior cloning and reshapes negative sample rewards to ensure gradient consistency. Also, to address the challenge of sparse rewards in long chain-of-thought reasoning, we incorporate an on-policy token-level reward model that identifies key tokens in reasoning trajectories for importance sampling.” There are more details in the paper, but mostly this goes to show that reward models (specifically, outcome reward models) are still important to reasoning and it isn’t that “explicit verification is all you need.”

llama3.1-8b_codeio_stage1 by hkust-nlp: The output of an interesting paper (from DeepSeek) on making reasoning training more efficient for code by transforming code to natural language and optimizing progress there.

Datasets

NuminaMath-1.5 by AI-MO: A new version of the popular NuminaMath dataset, which consists of various high school and competition math problems and their respective solutions.

OpenR1-Math-220k by open-r1: A subset of NuminaMath 1.5 with reasoning traces generated by DeepSeek R1.

stackexchange-question-answering by PrimeIntellect: Prime Intellect has released SYNTHETIC-1, a dataset collection of code and math problems with R1-generated answers.

OpenThoughts-114k by open-thoughts: A scale-up of the Bespoke-Stratos-17k dataset we’ve discussed in the previous episode. They now include code, science, and puzzles with generations from R1 in their dataset.

Tools

Lots of codebases are being spun up for RL training these days. Some of the first ones are libraries that implement verifiers. Two of these are:

Math-Verify from HuggingFace: Tools for extracting math answers from LLM text. This is very similar to what Ai2 uses for RLVR training with open-instruct.

Reasoning-Gym from Open-Thought: Verifiers for RL training across more domains. This library is a bit more complex, but it’s the most aggregated source of verifiers we’ve seen.

Verdict from haizelabs: A new repository/toolkit for scaling up compound calls and inference-time compute for LLM-as-a-judge workflows.