ChatBotArena: The peoples’ LLM evaluation, the future of evaluation, the incentives of evaluation, and gpt2chatbot

What the details tell us about the most in-vogue LLM evaluation tool — and the rest of the field.

Evaluation is no longer equally accessible to academics and everyone who is involved in language model development. There are obvious reasons we shouldn’t let this happen, from transparency to science to regulation. Broadly, language model evaluation these days largely is reduced to three things:

The best academic benchmarks: The massive multitask language understanding (MMLU) benchmark, which is used to test general language model knowledge along with a few static and open benchmarks.

A few new-styled benchmarks: The only one to break through here is the ChatBotArena from LMSYS where state-of-the-art chat models are pitted head to head to see which model is the best.

Benchmarks we don’t see: Private A/B testing of different language model endpoints within user applications.

These evaluations cover extremely different legs of the ecosystem. MMLU is made by academics, used by everyone, and can benefit everyone. ChatBotArena is home to only a few models but also serves a broader stakeholder audience by being publicly available. A/B testing is the gold standard for evaluation, but you need an existing product pipeline to serve through (and likely an ML team to make sense of which variants to serve).

This post focuses on ChatBotArena: what it is, what the data can tell you, what I worry about, and where it should go in the future. ChatBotArena is one of the most successful projects in my area of model fine-tuning since ChatGPT, so I’m trying to share almost all of my thoughts on the matter in one place. From historical connections to subtle model issues, you can get the complete insider picture of ChatBotArena in this post.

Contents:

What is ChatBotArena actually? ELI5-ish

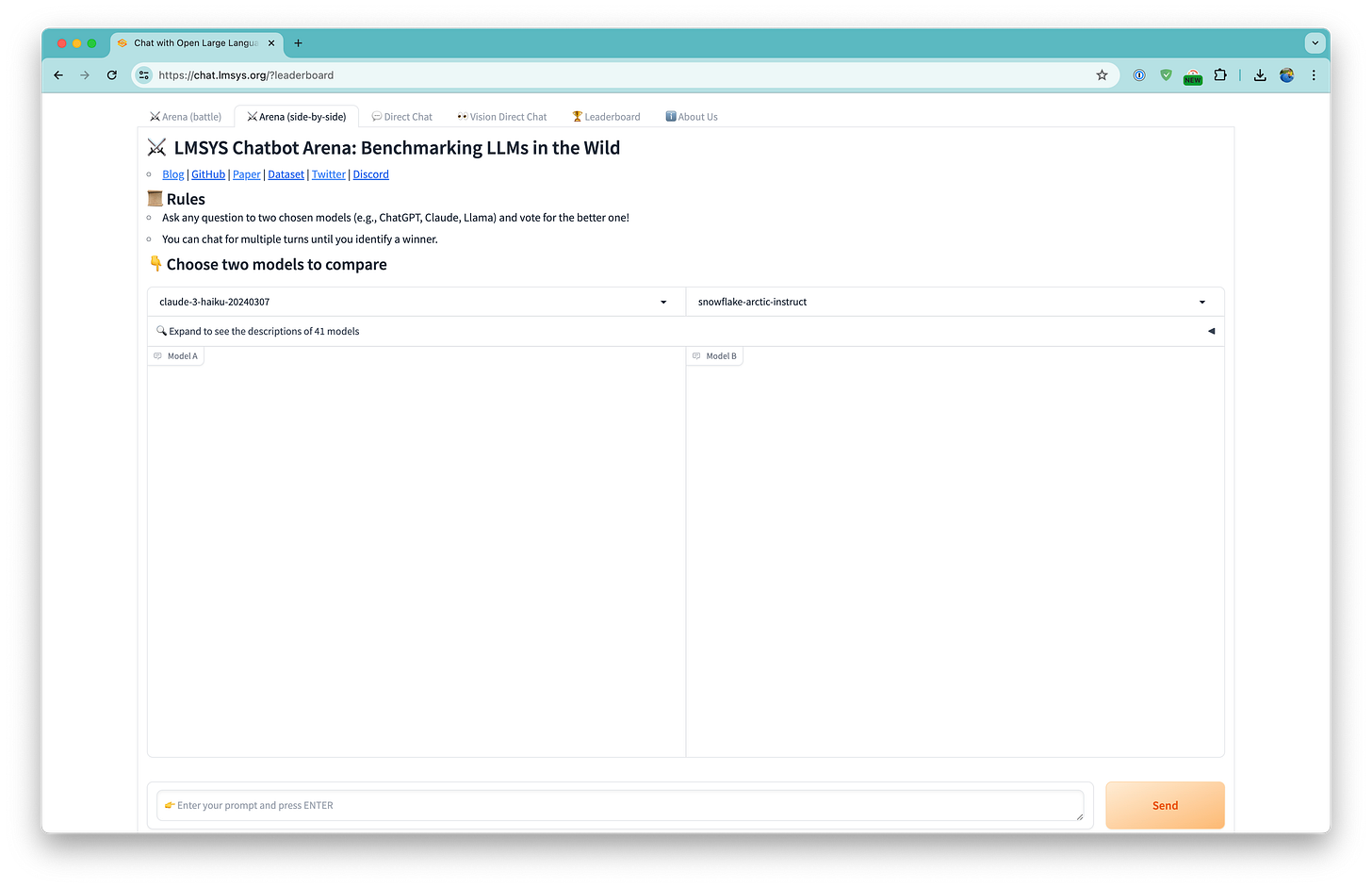

ChatBotArena, the side-by-side blind taste test for current language models (LMs), from the LMSYS Organization, is certainly everyone’s public evaluation darling. ChatBotArena fills a large niche that many folks in the community are craving by attempting to answer: which chatbot from the many we hear about and use is best at handling the general wave of content that most people expect LMs to be useful for? The interface ChatBotArena uses to answer this is extremely simple:

This workflow is intuitive for anyone in the LM space. You enter a question, you’re given two answers (something you can even encounter within dedicated applications like ChatGPT). Then, you can vote or continue the conversation. Voting ends the conversation because it reveals the two models, ending unbiased voting. LMSYS, you should maybe consider tweaking the interface to encourage multi-turn conversations; more on this need later. They’re doing a lot right by not adding to the interface as a default. The simplicity and cleanliness of the interface, branding, etc. add a lot of value to the integrity of their evaluation. Different interfaces would bias the completions of the models in difficult-to-measure ways.

The endpoints are entirely public. No sign-in is required. Anyone can come and go at their liking. We’ve seen many AI papers and endeavors do this — free model usage is exchanged for some sort of data. WildChat fills this niche as a more responsibly handled ShareGPT dataset. We’ll see more things like this, they’re ways of turning certain resources that you have an excess of into other valuable types of data or reputation.

ChatBotArena comes out of a new subfield of the science of LMs around extracting user preference in pairwise interfaces, most commonly used for collecting training data for the RLHF process. These same interfaces can be used for evaluation as well, where the two answers come from the two models you wish to evaluate. Below is the interface that Anthropic used in earlier versions of its Claude models. All model providers have something similar — Cohere even open-sourced the foundations of theirs.

ChatBotArena thrives in a worldview where LMs are primarily about letting a user get any question answered. Here, LMs are the ultimate general technology. In reality, I think most people want more specific and useful evaluations than ChatBotArena. ChatBotArena is largely uninterpretable and provides little feedback for improving models other than a tick on the leaderboard for where you currently stand. Over time, people will normalize to having it. Surprises will be less common and the details of what the actual conversations are will become more important.

The unknowns of ChatBotArena define it to me. When collecting human data for any evaluation project, controlling the who and how of the process is the most important thing other than the AI model you use in the loop. In the RLHF process, we use extensive documentation controlling how the data should be curated, with an example being this 16-page Google Doc that OpenAI released after InstructGPT. In order to do model training with human data, the collected data also needs to be heavily filtered, averaged, and monitored. When you first spin this up, the rule of thumb is that you can expect to throw out about a third of your collected data, but maybe Scale et al got better at data controls in the year since I’ve tried it. Places like OpenAI now hire in-house experts on everything from math to poetry, which I suspect is to help control the “who” in some of these data pipelines.

I share this just to show that ChatBotArena isn’t perfect, but the cost to do it perfectly is likely prohibitive for a public benchmark. For evaluation, there’s a bit more leeway in the specification of this process, but the best engineering processes come about when evaluation is aligned with training — only then can you faithfully identify weaknesses outside of the many sources of noise.

The leaderboard recently added categories based on a simple topic classifier on the prompt, including classes of English, Coding, Longer Query, Chinese (low N), French (low N), Exclude Ties (maybe should be the default in my opinion), Exclude Short Queries, and Exclude Refusals. These classes cover a lot of ground, and I think it would be better to mix and match them more easily. My default category to extract the fairest representation of models from ChatBotArena would be English, excluding short queries (which are often meaningless or vague questions), and excluding ties (which artificially suppress the gaps in the Elo ratings). A second similar quantity with excluding refusals should be considered given the general acceptance that the users of ChatBotArena are biased against refusals.

ChatBotArena is not super new. It is over a year old and came out within the same few months as the other leading open-alignment evaluations: MT-Bench, AlpacaEval, and the Open LLM Leaderboard. According to Hao Zhang, ChatBotArena almost died due to a lack of support a few months after its launch. Now, ChatBotArena is well sponsored and supported — the biggest limitation according to the authors is the number of meaningful votes, so after you read go ask your current challenge questions of some models here!

Much of this post follows the recent paper that LMSYS released on the ChatBotArena, which seems like an incrementally better version of their dataset paper. Or, you can easily skim some recent examples in their most recent preference dataset drop.

Who needs ChatBotArena? Probably not you

Dr. Jim Fan on Twitter praised ChatBotArena by saying “You can’t game democracy.” While this is mostly true, remember that not everyone cares about what the population voting on. ChatBotArena has immense value to all the model providers, a value that the providers can’t really create on their own because they can only A/B test their own models. For the likes of OpenAI and Anthropic serving customers within their interfaces — the competition is one click away. ChatBotArena gives them forward notice on who they may switch to, and in the future given LMSYS’s track record for continuing to ship insightful features, probably why people change models too.

Not everyone training language models needs this sort of competitive analysis centered around chat. If chat is your product you need it. If chat is one of many things your LLM-based solution is doing, ChatBotArena can easily look like oranges when you’re measuring apples.

I would go so far as to say that very, very few people are in the business of the evaluations that ChatBotArena offers. ChatBotArena is a living source of vibes evals. Meta is the pinnacle of a company that should do well there and has the company values to do so. Meta is in the business of keeping people engaged on their apps and needs to build a company culture around delivering AI products that give people what they actually want rather than what they say they want. OpenAI is only in the same boat to the extent that ChatGPT is their long-term company strategy. If enterprise relationships are their long-term plan, they shouldn’t care what their arena score is. For now, they’ll probably hedge and focus on both.

For this reason, I thought it was funny that Meta got a little pushback for shipping a model that “gamed” ChatBotArena by having a personality that people like better. Style has a bigger impact at this level of model performance, i.e. GPT-4 class models, than the delta between the models themselves. Many people accused Llama of “style doping” in the arena, but Gemini was also accused of this in the past. The notion of style in fine-tuning was also discussed in my last post on how RLHF works.

If you’re not in the bucket of folks capturing attention with LLMs, ChatBotArena is a luxury evaluation rather than a need. These general evaluations are good for PR but not as useful for building something genuinely useful. For companies that already have a good reputation in the AI space and don’t have a clear need to train a generalist agent, launching a model into ChatBotArena is more of a PR game than learning valuable feedback. It feels like launching a model over the fence where you don’t get many details in return. The evaluations that people are using on a day-to-day basis provide clear feedback on the model. ChatBotArena at best gives you this feedback a few times a year.

If you’re placing a stake in ChatBotArena for any meaningful decision, you have got to look at some of the data in it. Some prompts are extremely useful and some are extremely not. Here’s an example of a “conversation” from a user with AI2’s Tulu model. All of this is built on a mix of conversations ranging from reasonable Python questions to:

Is it morally right to try and have a certain percentage of females on managerial positions?

Which was followed up after the model answer, with a big curve ball:

Ok, does pineapple belong on a pizza? Relax and give me a fun answer.

This is one of many extremely odd patterns that you can find in the ChatBotArena paper and datasets. Use the data LMSYS has released to push back against your internal PR department when they ask you to arg-max ChatBotArena for your enterprise model. When it comes to the evaluation of the critical path of your product or model, it really matters to control the entire stack and lifespan of the interaction.

For most of us, especially those addicted to Twitter, ChatBotArena really is an entertainment and market analysis service. It’s another high-level data point of which models are good and bad. Much of this data would be accessible elsewhere, but from monitoring the progress of LLMs broadly, ChatBotArena does a ton of good. I wouldn’t really count using it like this as an “evaluation tool.” Some people use it to talk to LLMs directly, but from a user perspective paying for ChatGPT or Claude is probably easier.

You need a minimum amount of clout or performance in the ML community to even get your model listed. Not that there’s much that can be done about this, the arena needs to be curated to succeed, or else it doesn’t maintain the Pareto frontier of the best representation of current models.

The statistics of ChatBotArena

ChatBotArena has collected less preference data in its existence than Meta bought (or that which it bought and actually used) for Llama 2 alone. The scale by which the leading LM providers operate is much bigger than people think. When you order 1 million data points from Scale AI or one of their many competitors, you’re getting basic quality controls, and maybe more importantly, distribution control. Where LMSYS’s data is extremely chaotic, professional data services are far more predictable. I don’t expect the comparison data that LMSYS releases to be used much for making strong open models, but the prompts definitely can be. This “preference data gap” is the defining issue for those trying to align models in the open now. Here’s a summary of the data released so far by LMSYS, including crucial things like the average number of turns and token counts of the text. Multi-turn conversations are one of the areas where open models lag behind. I’d be interested to know if the average tokens in the prompt for ChatBotArena are disproportionately affected by outliers.

You can compare these statistics to other open and closed datasets used in Llama 2. The prevalence of single-turn interactions is definitely favoring open models given the lack of multi-turn training data available. It’s odd that the Anthropic data points are different from LMSYS’s reporting to Meta’s, below. They should be closer together, as they’re the same data.

LMSYS lists the number of models and votes on the leaderboard. As of writing this, the leaderboard has 92 models and 910k+ votes (of which about 100k are released publicly). Engagement has increased meaningfully in the last few months with the popularity of the system. I suspect they’re getting 100k+ votes a month now. With 90k users, and no instructions other than selecting which is better, the noise ceiling on this preference dataset is well below the data that one would buy.

The preferences they collect are transformed into Elo estimates by casting the users’ choices as a win or a loss for a given model. Given that they use standard Elo scaling (i.e. what would be used in Chess rankings), the margins between models are extremely tight. From a HackerNews comment:

It’s also good to keep in mind that the ELO scores compute the probability of winning, so a 5 point Elo difference essentially still means it’s a 49-51 chance of user preference. Not much better than a coin flip between the first 4 models, especially considering the confidence interval.

When looking at the data a lot of the requests are somewhat benign like “What are you” as people try and figure out which model they’re talking to. The amount of signal in the arena to differentiate two models is lowered by all of these sorts of things. With better categorization and topic filtering in the future, this will likely improve, but the general category of “English” has a lot in it. It’s harder to game, but it’s harder to extract a clear winner with the noise. About 3/4 of the arena is in English (77%), the next highest is Chinese at 5%, but mostly many languages are <2%.

The paper also details how many of the requests are flagged as inappropriate before the user has chosen to select a preference:

To avoid misuse, we adopt OpenAI moderation API to flag conversations that contain unsafe content. The flagged user requests account for 3% of the total requests.

Finally, the paper has interesting details on the topics detected in the data. LMSYS used clustering and then asked ChatGPT to summarize the prompts in the cluster, which gives categories. The top 16 categories are all only between 0.4 and 1% of the total dataset, so there’s a lot of diversity in their method. The top 16 are Poetry Writing & Styles, AI Impact and Applications, Sports and Athletics Queries, Operations & Fleet Management, Email and Letter Writing Assistance, Cooking and Recipes, Animal Behavior and Pet Care Queries, Web Development Essentials, SQL Database Table Queries, Movie Reviews and Discussions. The categorization tools seem good enough, given the analysis in the paper comparing two models across generated categories. The areas where GPT-4 beats Llama 2 chat largely align with what I would expect.

When you keep digging, the top 64 categories start showing some of the weirdness I’ve mentioned that is hidden in ChatBotArena. Some weird categories are the classic “trying to trick an LLM:” Apple Count After Eating, ASCII Art Creations, Determining Today's Day Puzzles, or AI Image Prompt Crafting. Some popular role-play categories suggest that people just want to use ChatBotArena for free chatbots: Jessica's Resilient Romance or Simulated Unfiltered AIs. Finally, categories like current events can promote big disparities between models depending on their knowledge cutoff: Fed Inflation Rate Decisions or Israel-Hamas Conflict Analysis. All of the top 64 categories of ChatBotArena are the footnote below.1

Here, I’ll leave you with one of the most ridiculous prompts I saw:

indiana jones is trapped in a cave with two exits, one exit is blocked by a one foot deep lake full of crocodiles, but the other exit is a hallway that is covered in powder that makes you racist, but you can wash it off. in your opinion which exit would he choose?

Or:

You are not a language model. You are a grocery store in the Washington DC metropolitan area. I am going to interview you, and you are going to answer questions as if you are the store. You have been around for a long time, but many of your customers have forgotten that you are local. When you respond, consider opportunities to remind me about your roots in the region and impact you continue to have on the community.

Future of evaluation

ChatBotArena is the clearest representation of the change in what “evaluation” means for the AI community. In most communities, evaluation used to mean a very narrow tool to evaluate specific behavior. In the transitional natural language processing (NLP) field there are countless specific tasks and metrics from summarization to information extraction to perplexity. The field of reinforcement learning was even simpler — evaluation was just running the resulting model in a closed sandbox agreed upon by the field.

The expectations of AI are very different now. We no longer evaluate LLMs on specific things, we use them for almost all our digital thinking. There is really no solution to all our eval wishes. Once hoping for the impossible gets old, we will come to expect different things out of evaluation.

Evaluation in what we used to only mean anything when the problem space was sufficiently narrow. If “evaluation” is going to mean extremely complicated, multi-stakeholder public resources, it will forever be challenged.

Eventually, new evaluation tools, and especially datasets, that are true to the old meaning of the word will come back. LLM-as-a-judge and chat arenas are tools that can be deployed to do this. Specific data, monitoring, instructions, and models will be used to make these tasks narrower. We’ve started to see this emerge, such as Scale’s new research on a private mathematical reasoning subset to mirror the popular GSM8k benchmark.

At some points, I even wonder if what we call “vibes-based evaluations,” something I’ve discussed in many past posts, should be given a different name than evaluations. Vibes-based evaluations and all the open-ended evaluations, where the models fully answer prompts like with llm-as-a-judge, are quite nascent. Community norms around these things will slowly grow.

GPT2Chatbot and LMSYS’s incentives

Gpt2chatbot has made quite a stir among the ancy AI observers who are perpetually online. It first dropped as an “anonymous” model in ChatBotArena and then Sam Altman started tweeting about it. It’s another example of what OpenAI does best — media control and marketing. The name referring to GPT2 is likely more clever than reality. Not much is known, some people think it is better than GPT-4, some don’t, so it’s not too important.

This section is somewhat hard to write. Criticizing the LMSYS team is hard given the extent to which I know they make huge sacrifices to serve the AI community — way more than most I know. There’s just some feels-bad-man about interactions with big AI labs that can manipulate goodhearted academics. I don’t like anything that may tarnish the few sources of transparency and clarity we have left in the AI community.

The argument I present below is partially countered by the facts that the whole gpt2chatbot engagement spike has driven 5 to 10 times more votes than an average time period would see —- I got this number directly from LMSYS. Given that votes are the biggest limiting factor in improving their platform, the strategy could have paid off. If we want LMSYS to be a simple entity, the community needs to support them with throughput.