Latest open artifacts (#18): Arcee's 400B MoE, LiquidAI's underrated 1B model, new Kimi, and anticipation of a busy month

Tons of useful "niche" models and anticipation of big releases coming soon.

January was on the slower side for open model releases compared to the record-setting year that was 2025. While there were still plenty of very strong and noteworthy models, most of the AI industry is looking ahead to models coming soon. There have been countless rumors about DeepSeek V4's looming release and its impressive capabilities, which would arrive in a far more competitive open model ecosystem.

In the general AI world, rumors that Claude Sonnet 5 could be released as soon as tomorrow have been debated all weekend. We're excited for what comes next; for now, there are plenty of new open models to tinker with.

Our Picks

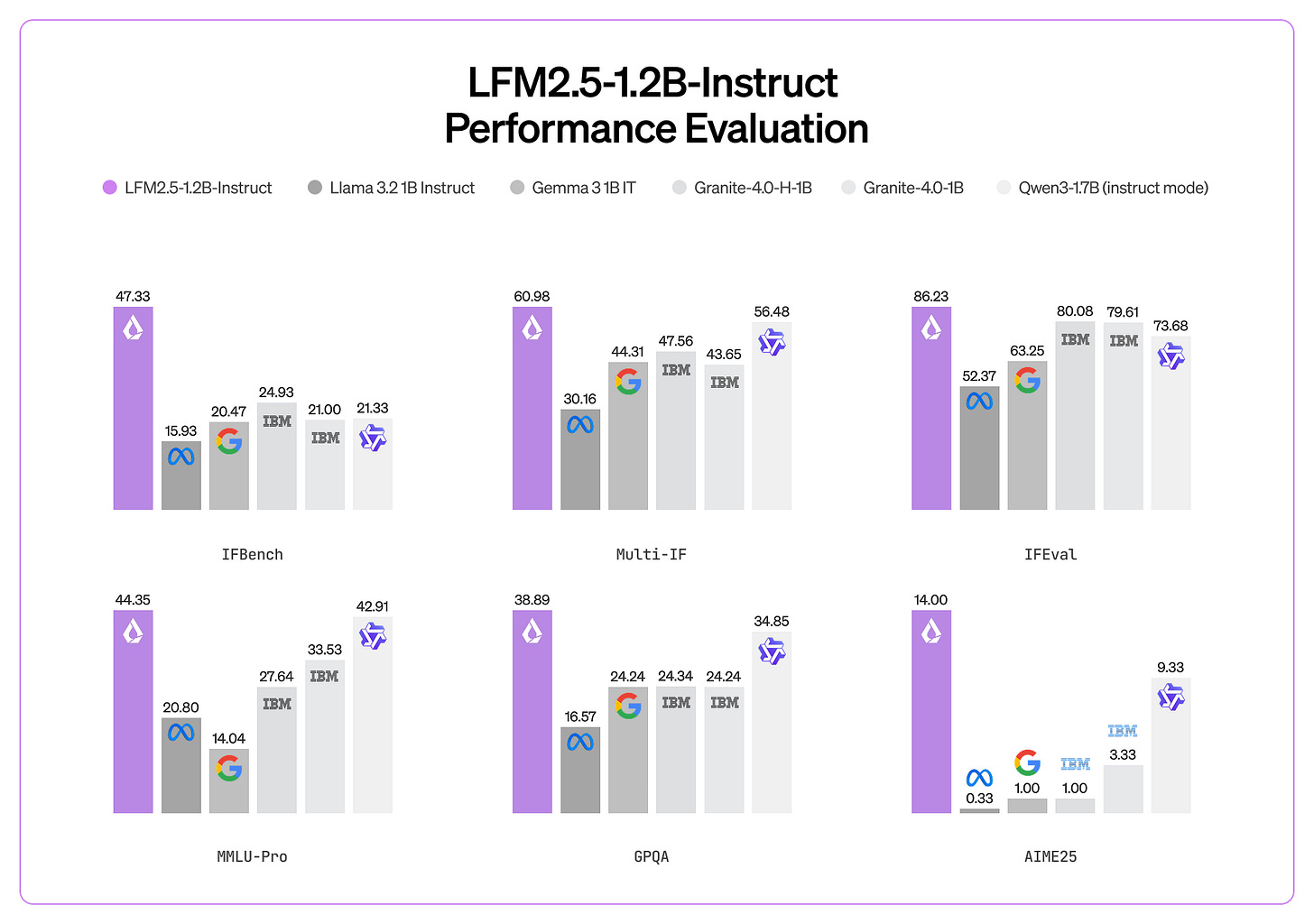

LFM2.5-1.2B-Instruct by LiquidAI: Liquid extended pretraining from the 10T tokens of their 2.0 series to 28T tokens, and it shows! This model update really surprised us: in our vibe testing, it came very close to Qwen3 4B 2507 Instruct, which we use every day, despite being over 3 times smaller. In a direct comparison against the (still bigger) Qwen3 1.7B, we preferred LFM2.5 basically every time. This time, Liquid also released all the other variants at once, namely a Japanese version, a vision model, and an audio model.

Trinity-Large-Preview by arcee-ai: An ultra-sparse MoE with 400B total and 13B active parameters, trained by an American company. They also released a tech report and two base models: a "true" base model checkpointed before annealing, and the base model from the end of the pre-training phase. Many more insights, including technical details and their motivation, can be found in our interview with the founders and pre-training lead.
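To put "ultra-sparse" in numbers, here is a minimal sketch that computes the share of parameters an MoE activates per token, using only the headline counts quoted in this issue. It assumes those rounded figures (400B/13B for Trinity, 30B/3B for the small Qwen3 MoE size) are representative; exact counts, including embeddings or shared experts, may differ slightly. The function name `active_fraction` is our own illustration, not part of any library.

```python
# Hypothetical helper: share of an MoE model's parameters used per token.
# Inputs are the rounded headline counts (in billions) quoted in the text;
# real parameter counts may differ slightly.

def active_fraction(total_params_b: float, active_params_b: float) -> float:
    """Return active / total parameters (both given in billions)."""
    if total_params_b <= 0 or active_params_b <= 0:
        raise ValueError("parameter counts must be positive")
    if active_params_b > total_params_b:
        raise ValueError("active parameters cannot exceed total parameters")
    return active_params_b / total_params_b


models = {
    "Trinity-Large-Preview (400B total, 13B active)": (400, 13),
    "Small Qwen3 MoE size (30B total, 3B active)": (30, 3),
}

for name, (total, active) in models.items():
    frac = active_fraction(total, active)
    print(f"{name}: {frac:.2%} of parameters active per token")
```

By this rough measure, Trinity uses about 3% of its weights per token, versus 10% for a 30B-total, 3B-active model, which is why it is described as ultra-sparse.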

Kimi-K2.5 by moonshotai: A continual pre-train on 15T tokens, and this model is also multimodal! People on Twitter have replaced Claude Opus 4.5 with K2.5 for tasks that can make do with a less capable but cheaper model. However, the writing capabilities that K2 and its successor were known for have suffered in favor of coding and agentic abilities.

GLM-4.7-Flash by zai-org: A smaller version of GLM-4.7 that matches the size of the small Qwen3 MoE, with 30B total and 3B active parameters.

K2-Think-V2 by LLM360: A truly open reasoning model building on top of their previous line of models.

Models

Reading through the rest of this issue, we were impressed by the quality of the "niche" small models across the ecosystem. From OCR to embeddings and song generation, this issue has some of everything, and there tend to be open models that excel at nearly any modality needed today; they can just be hard to find!