Local LLMs, some facts some fiction

The deployment path that’ll break through in 2024. Plus, checking in on strategies across Big Tech and AI leaders.

Everyone knows local models, e.g. running large language models (LLMs) on consumer’s own hardware, is going to enable new ways of using the technology. Local LLMs can provide benefits beyond common themes like customization and information security. In the takeoff phase of technology like LLMs, new ways of using them often give you a chance at finding some new product market fit. Right now, it’s driven by process in quantization to lower the model footprint and high-throughput code for inference.

While the types of things that get the press are often hackers saying “If I can just run my Llama 7b on my fridge in addition to my Garmin watch, they can talk to each other and train each other with self-play for 42 years to generate 1million tokens.” In reality, local models matter almost entirely for their place in the strategies enabled by fundamentally different bottlenecks and scaling laws. Local models will win because they can solve some of the latency issues with LLMs.

When viewing the ChatGPT app communicating via audio, the optimization of latency looks like how can we reduce the inference time of our model, efficiently tune batch sizes for maximum compute utilization, reduce wireless communication times, try streaming tokens to the user rather than outputs in a batch after the end token, decide if you render audio in the cloud or on-device, and so on.

For local models, it’s a much simpler equation: how can I get maximum tokens per second out of my model and hook it up with a simple text-to-speech model? Apps like ChatGPT on the iPhone will be plagued by sandboxing core to iOS’s design. The reduced complexity of running locally, where an LLM can have an endpoint described within the operating system, removes many of the potential bottlenecks listed above. In the short term future, Android phones will likely have a Gemini model in this manner. Further in the future, consumer devices will have all of an LLM, a text-to-image model, audio models, and more. The primitives in the operating system that wins out in AI will expose all of these to developers. I’m excited.

The second core axis by which local models are more likely to be optimized for latency is that companies like OpenAI are trying to take their best model and make it fast enough for real-time audio, while companies and hackers looking on-device can invert the question. It’s an existential question for frontier model providers to follow that path — the capital costs and growth dictate their hand.

Others can ask: How do we train the best model that’s still small enough to have ~20-50ms latency? Latency for LLMs in the cloud like GPT4Turbo are still hovering around 100ms or more. If you’re talking with a robot, the difference between these two latencies is the difference between a viable product and an intriguing demo, full stop.

This is also backed up by the pure cost of hosting. In the last few months serving open GPT3.5 competitors like Mixtral has been commoditized. In this case, pushing it to local compute is the only way to win on a cost basis. See a detailed analysis of this on SemiAnalysis and more on Artificial Fintelligence for the race to zero in open model inference.

Local models are governed by much more practical scaling laws, those of power efficiency, batteries, and low upfront cost. Their counterparts in the hyper-scaler clouds are in the middle of an exponential increase in both upfront and inference cost, pointing at economies of scale that haven’t been proven as financially reliable. The hosted, open weight models getting driven to 0 inference cost are stuck in the middle. The LLMs that can run on an iPhone will be here to stay either way.

While most researchers are obsessed with tokens/second numbers or counting flops for training throughput, it’s pretty clear that latency will be the determining factor for do-or-die moments across LLM services in the next few years. While writing this, the clip of the new Rabbit r1 device taking 20 seconds to finish a request blew up on Twitter and TikTok.

Slow latency totally takes you out of the experience. It’s a churn machine.

For more on why ChatGPT with audio will be a compelling product, I recommend reading this piece from Ben Thompson.

The personalization myth

If I could run a faster version of ChatGPT directly integrated with my Mac, I would much rather use that than try and figure out how to download a model from HuggingFace, train it, and run it with some other software.

While local models are obviously better for letting you use the model of your exact choice, it seems like a red herring as to why local Llamas will matter for most users. Personalization desires of a small subset of engineers and hackers, such as the rockstars over in r/localllama, will drive research and developments in performance optimization. The optimizations for inference, quickly picked up by large tech companies like Apple and Google, will be quietly shifted into consumer products.

The other things, such as folks talking to their AI girlfriends for hours on end, will co-develop, but largely not be the vocalized focus of local model tool builders. As was the case with Facebook and the App Store, Apple will benefit from its ideological enemy. If Apple makes the most optimized local devices, everyone will buy them, even if the corporate values disagree with the application of use.

There will always be a population of people fine-tuning language models to run at home, much like there will always be a population of people jailbreaking iPhones. Most consumers will just go with the easy path of choosing a model of choice, selecting some basic in-context/inference time tuning, and enjoying the heck out of it.

In a few years, the local models in operating systems will have moderate fine-tuning and prompting options for personalization, but I expect there are still guardrails involved. If we’re going to benefit from the large amount of capital it takes to make these devices, it’s likely the most popular ones have what some people view as minor drawbacks. Local models will always be less restrictive than API endpoints, but I find it unlikely that moderation goes to zero when provided by risk-sensitive big tech companies.

There’ll be a fork in the optimizations available to different types of machines. Most of the local inference will happen on consumer devices like MacBooks and iPhones, which will never really be fully optimized for training performance. These performance-per-watt machines will have all sorts of wild hardware architectures around accelerating Transformer inference (and doing so power efficiently). I bet someone is already working on a GPU that has directly dedicated silicon for crucial inference speedups like KV caching.

The other machines, desktop gaming computers, will still be able to be rigged together for training purposes, but that is such a small component of the population that it won’t be a driving economic force. This group will have an outsized voice in the coming years due to their passion, but most of the ML labs serve larger ambitions.

Some basic resources on local models:

The Local Llama subreddit.

A recent Import AI covered local models.

Ollama, is a popular tool for playing with this stuff.

Meta’s local AGI and moats X factors

Last week, Zuck posted a reel bragging about Meta’s build-out of 350k Nvidia H100 GPUs through 2024. Zuck promised responsible, open AGI, Llama 3, and enjoyable metaverse Ray Bans all in one go.

For this reason, Meta is the most interesting big tech company to analyze AI strategies — because it’s the most open-ended.

All the noise Zuck is making with his impulse, post-workout selfie videos is about kneecapping his competition on their way to a new business model. The open-source strategy is about enabling easy access to technology so they’re less reliant on their competitors (and so their competitor’s customers look elsewhere). 350k H100s and all that is still well less than his competitors need to spend to realize their goals via scaling laws, and Meta can amortize this compute over tons of deep learning-driven products like video understanding or image generation for billions of users.

Some say that Meta is trying to use open source to catch up with the leading AI companies. I don’t think Meta has the incentive to capitalize here and eventually become a closed, leading AI company in the future (even though this is an outcome I would welcome gladly).

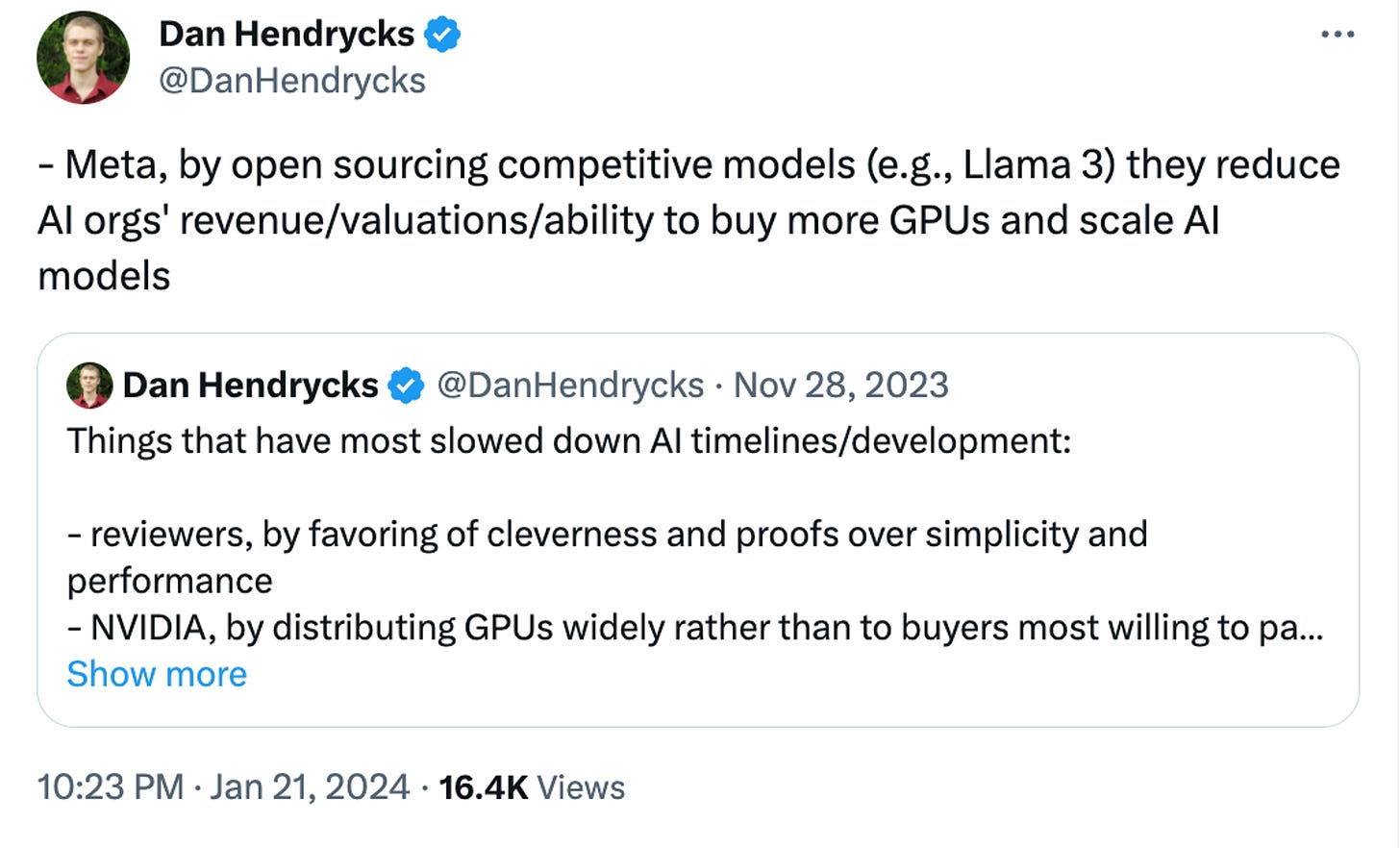

My favorite supporter of this theory, from a different worldview, is Dan Hendrycks of the Center for AI Safety.

The world of local models, which is downstream of open-weight LLMs, is why I still have to bet on Apple. It’s also why Meta and Apple are yet again tied at the hip. No company other than Apple has a better culture for trying to remove all the frills from an experience and make it fast, easy to use, and effective. It’ll take some major cultural changes to enable a hardware-accelerated local LLM API in apps, so they still have an Achilles heel, but it’s not yet a big enough one to ignore here.

Their peer in the specialized performance-per-watt hardware, Google (with their new Pixel TPU chip for Gemini Nano), will likely be the one that wakes them from their slumber. Google’s future in LLMs is up in the air. They have plenty of talent to do things, but it is not clear if the talent density is high enough or if the management has the capabilities to extract it. I still think Google will have their moment in the sun soon at the leading edge, and if not, they have a solid pivot to local awaiting them.

The other company with prevalent home devices is Amazon. While I don’t expect the Echo’s to pull this off, they still have one of the best form factors for verbal assistants. They better buy Anthropic quickly if they want to capitalize on this.

OpenAI is still hanging on comfortably because they have by far and away the best model and solid user habits. In 2024 the model rankings will be shaken up many times, so they can’t sit too pretty.

Audio of this post will be available later today on podcast players, for when you’re on the go, and YouTube, which I think is a better experience with the normal use of figures.

Looking for more content? Check out my podcast with Tom Gilbert, The Retort.

Newsletter stuff

Elsewhere from me

On episode 16 of The Retort, we caught up on two big themes: compute and evaluation.

Models & datasets

01 AI (the Chinese company) released their first visual language model (6B and 34B variants). Looks promising.

Stability released a new version of their small LM (1.6B)!

Snorkel released a 7B model (from Mistral Instruct v2) trained with a rejection-sampling like DPO method (like the new Meta paper on Self-rewarding LLMs). They say a blog post is coming soon, but I expect this type of training to take off in the next few months with the amount of early traction we’re seeing.

Links

HuggingFace released two new repositories for LLMs work. One on training parallelization and one on data processing (which is really similar to AI2’s Dolma repo).

AlphaGeometry, the new work from DeepMind solving math Olympiad problems, was accompanied by quite a good talk from one of the authors. It’s a cool explanation on how to do deductive trees for math with LLMs.

My former colleagues at HuggingFace released KTO vs IPO vs DPO comparisons. It’s a solid first step, but we have a lot more to learn as a community.

A cool new website for evaluating open and closed models on many axes prioritizing visualizations has been making the rounds. I will let you know if I use it.

A good post summarizing the anti openness positions of many AI Safety think tanks (and wow they’re many). I don’t necessarily agree with all the details / title, but this blog consistently has pretty good stuff.

Housekeeping

An invite to the paid subscriber-only Discord server is in email footers.

Interconnects referrals: You’ll accumulate a free paid sub if you use a referral link from the Interconnects Leaderboard.

Student discounts: Want a large paid student discount, go to the About page.

@Nathan: "The second core axis by which local models are more likely to be optimized for latency is that companies like OpenAI are trying to take their best model and make it fast enough for real-time audio, while companies and hackers looking on-device can invert the question. It’s an existential question for frontier model providers to follow that path — the capital costs and growth dictate their hand" - Can you clarify who are you calling as frontier model providers? those serving from the cloud like OpenAI or from local devices where a localized version resides

VJ