Breaking down the latest AI news with real-world frontier model experience

Interconnects is a newsletter covering the latest AI models, the methods used to train them, and the trajectory of the technology. One to three times a week, you’ll receive a mix of essays, model reviews, interviews with researchers, and surveys of the open model ecosystem. Interconnects is founded and operated by Nathan Lambert, a senior research scientist and post-training lead at the Allen Institute for AI (Ai2). At the non-profit Ai2, Nathan trains open language models (Olmos) and shares cutting-edge research. Interconnects serves his broader goals of creating a more informed AI ecosystem built on openness and transparency.

Interconnects’ value

Interconnects grew out of a longer history of Nathan trying to share educational resources in the AI space. Soon after the release of ChatGPT, Nathan turned a hobbyist approach to writing into a weekly commitment, with over 300 posts to date. This writing is now deeply intertwined with his research practice and taste, aiming to transfer to readers the feeling of understanding a cutting-edge research topic in AI.

Nathan is also known for a series of prominent research works in post-training language models, such as Olmo, Tülu 2 and 3, RewardBench, Zephyr-Beta, the Open LLM Leaderboard, Molmo, and the first textbook on RLHF. Core topics of Interconnects follow Nathan’s work closely, from the early days of reinforcement learning from human feedback (RLHF) to today’s reasoning models, with a particular focus on open-source AI and open models. To understand Nathan’s approach to research, you can read more about his path into AI.

Many of the most popular pieces on Interconnects are frontier model reviews, such as recapping the DeepSeek R1 recipe or explaining o3’s odd over-optimization. For some more timeless pieces, consider exploring:

Why Reasoning Models will Generalize, Jan. 2025

Scaling Realities, Nov. 2024

AGI is What You Want it to Be, Apr. 2024

All essays and model reviews are narrated by Nathan and available in podcast feeds alongside the interviews.

How to support Interconnects and its mission

The most valuable thing you can do is subscribe and share Interconnects with friends. On Substack, likes, comments, restacks, and any other engagement meaningfully shift visibility.

To build on this, upgrading to a paid subscription pays for trialing AI services (Nathan uses all the premium products, some costing hundreds of dollars per month, so you only need to buy the ones that are worthwhile) and supports a small team that helps Interconnects run smoothly while Nathan focuses on his full-time research job. Paid subscribers get access to paywalled essays and monthly roundups of open models and datasets. In addition to this content, paid subscribers get access to:

The Interconnects Discord server, with 300+ members made up of paying readers and Nathan’s network of researchers building frontier models and other AI systems.

Access to Interconnects Dinners & Events at major AI conferences with leading researchers, founders, and investors.

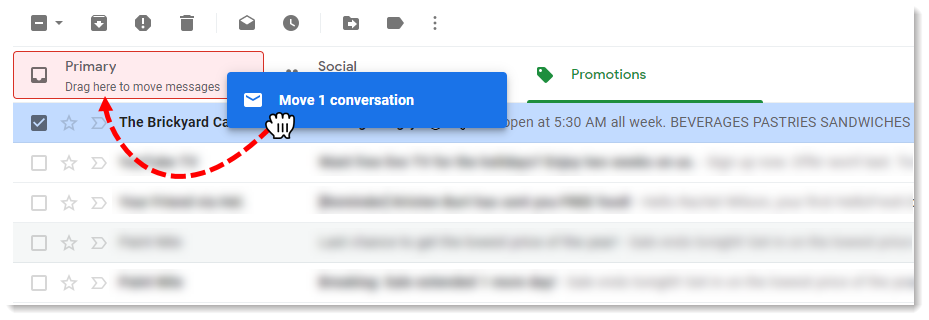

One piece of housekeeping — if my emails are landing in your “promotions”, “updates”, or “spam” folders in Gmail (or whatever email service you use), and you’d like to see them more often, please consider moving Interconnects to the “primary” folder instead! Just click and drag it like this:

A dialog box will then appear asking “Conversation moved to Primary. Do this for future messages from Interconnects.ai?” If you click “yes”, all future newsletters will be delivered directly to your main inbox and won’t get lost among junk email.

Related projects

Nathan is building a coalition for truly open models in America with The ATOM Project.

Nathan is writing the first textbook on reinforcement learning from human feedback (RLHF) and its role in post-training.

Nathan continues to train leading open-source AI models at Ai2 under the Olmo, Molmo, and Tülu brands.

Testimonials

Interconnects (and Nathan’s work broadly) has received praise and recommendations from numerous prominent AI researchers. Some highlights include:

John Schulman, Co-founder at Thinking Machines, Co-founder of OpenAI, fmr. ChatGPT lead, Anthropic:

Reinforcement learning from human feedback has exploded in popularity over the last few years since its pivotal role in ChatGPT. Nathan’s Interconnects blog is the best blog on this topic, and I’ve recommended it as further reading material in my talks.

Timothy B. Lee, Understanding AI:

Insightful and accessible commentary from a guy whose day job is actually training AI models.

Dean W. Ball, Hyperdimensional:

Leading insights on the state of open-source AI.

Follow Interconnects

Follow Nathan on X, Bluesky, Threads, LinkedIn, YouTube, and GitHub.

Get Interconnects on YouTube, Twitter, LinkedIn, or Spotify.

Get in touch

For information on contacting Nathan, see his contact page. For Interconnects partnerships or opportunities, email mail at interconnects.ai.

If you want to propose a guest post, please email me a completed draft.

Interconnects AI is a newsletter owned and operated by Interconnects AI, LLC.

No LLMs used to write this

I only use LLMs for editing: Grammarly for typo detection and a second full pass with Claude and/or ChatGPT (which usually does find interesting typos). LLMs are not used to write prose or make diagrams, only occasionally for title brainstorming.

Disclosures

I was a paid advisor to Tola Capital in 2024.

As part of paying for the ElevenLabs API to generate my voiceovers, I enrolled in their referral program, which currently nets me $0 (try it here).

Otherwise, I have accepted no advertising dollars and pay for the services I use.