Google ships it: Gemma open LLMs and Gemini backlash

Google rejoins the open model party and gets some backlash for a frequent problem for generative AI.

On Wednesday, February 21st, Google announced their open-weight models from the Gemini line of work:

Gemma is a family of lightweight, state-of-the-art open models built from the same research and technology used to create the Gemini models. Developed by Google DeepMind and other teams across Google, Gemma is inspired by Gemini, and the name reflects the Latin gemma, meaning “precious stone.” Accompanying our model weights, we’re also releasing tools to support developer innovation, foster collaboration, and guide responsible use of Gemma models.

Gemma is pretty widely available for an initial launch. The weights of pretrained and fine-tuned models are on HuggingFace, it works in JAX, PyTorch, and TensorFlow, popular model training repositories, they gave us a “ready-to-use” Colab, and it comes with commercial-friendly terms of use (that has another issue we’ll get to).

Regardless, this model is great! It sets a new standard at 7 billion parameters, pretty steadily surpassing Mistral 7B. In summary:

4 model versions: 2 and 7B* variants of the base model and RLHF model (denoted -it). It’s really 8B parameters.

Open commercial weights: The weights are available commercially, but come with two terms that restrict model use — 1) no waifus and 2) requirement to update the model similar to the original RAIL license.

Weird architecture: many architecture choices are not standard and could make it harder for people to fine-tune the model (e.g. compatibility issues with FlashAttention 2).

Very likely the Gemini tokenizer: multi-lingual tokens and image tokens indicate this is very likely the Gemini tokenizer (it’s Llama-like). Releasing it isn’t a huge deal, tokenizers don’t offer a lot of gain but offer a lot of downside if you get them wrong.

Pretraining annealing: they end pretraining with “relevant, high-quality data” to improve performance. This largely boosts the base model evals but hasn’t been documented to help downstream alignment.

Alignment (partial) details: Google uses REINFORCE, a larger reward model, and the InstructGPT SFT KL penalty.

Extra resources: Collection on HF with all weights, blog post, and a technical report.

At the same time, Google has been dealing with backlash to the image generations from its flagship model series, Gemini. The title from The Verge tells most of the story: Google apologizes for ‘missing the mark’ after Gemini generated racially diverse Nazis. In short, much like DALLE 2 in recent years, Google got backlash for too strongly adding bias corrections to their model. In this case, talking with many researchers on the ground, it is that multimodal RLHF and safety fine-tuning is much harder than a single modality, so I expect this to be more of a blip than a major story. I’ll still cover what it means later in the post, but for now, we start with Gemma.

Getting to know Gemma

Gemma takes the next step on the LLaMA 7B to Llama 2 to Mistral progression of 7 billion-ish parameter models. The core model evaluations are really strong across a wide range of tasks (including coding) and I suspect a ton of fine-tuned models in the coming months are based on it.

Most of this post will focus on the 7B model (and its aligned variant), which is also clearly more of an investment from them based on compute spend. The 7B model is trained for 6 trillion tokens, getting close to the rumored 8 trillion of Mistral 7B, while the smaller 2B parameter base model is only trained for 2 trillion tokens. The 2T model also has more of a mixed bag of results when compared to the known-to-be evaluation fishing Phi 2 model. The core point of my most recent post on open base models, OLMos, is the need to compare models weighed by parameters and token count. Llama 2 is only trained on 2T tokens, OLMo 7B gets close to it by training on 2.5, and it makes sense that Mistral and Gemma are way better with 6-8T tokens.

While the pace in progress of base LLMs on the data and architecture side is high (architecture other than the benefits of mixture of experts is mostly about optimizing hardware and training stability), it’s not worth it to spend more time training a base model. In a few years, we’ll see models are every major size checkpoint easily trained on twice as many tokens as we’re seeing now. By the end of the year, 1B to 7B models on 10T tokens will likely happen, and 20 or 30T isn’t ridiculous in the long timeframes. It makes comparing models harder, but it’s the next evolution of scaling laws.

One technicality that I need to add before showing the evaluations is that it’s more of 8B language model with .7B embedding and 7.75B non-embedding (total well over 8B) parameters, but they wanted to ride the comparison wave where everyone trains on 7B parameters. 7B is the people’s model size. It is accessible to many to fine-tune and accessible to almost everyone for inference.

The architecture that Google used to get here is at best nontraditional. The details are all in the report (or the model weights / inference code) and are hard to draw implications from individuals, so I don’t focus on them too much here. You can read some discussions on X | here. The sort of things discussed are the huge vocabulary in the tokenizer (which increases embedding dimensions in attention operations) and the head dimension is too big for flash attention on consumer GPUs (which was then updated day-off by Tri Dao, legend).

It’s also all but confirmed officially that Gemma uses Gemini’s tokenizer, due to things like image or multilingual token handling being included, even though Gemma is trained on English and has no multimodality. For more on the tokenizer, which is more likely to cause issues when it’s wrong than to drastically improve open models, I recommend this post from Karpathy.

One of the more interesting technical details that folks picked up on is the annealing of pretraining with higher-quality data at the end. The Gemma paper notes:

Similar to the approach advocated in Gemini, we stage training to alter the corpus mixture throughout training to increase the weight of relevant, high-quality data towards the end of training.

The evidence I’ve seen in the literature and some of our own experiments is that most of this effect serves to boost the scores of the base model rather than deliver improvements to the model post-alignment. It’s pretty likely that Llama 2 and Mistral did this to some capacity with the information we currently have. One example in the literature mentioning this is from the DeepSeek LM paper:

We observed that the base model did exhibit improved performance on the benchmark. However, the final outcomes were nearly identical to those achieved by adding the same data during the SFT stage. We conclude that while this approach strengthens the base model’s performance on the benchmark, its overall potential is equivalent to not incorporating these instruction data.

Is Meta now in third when it comes to open models? So much has happened since Llama 2.

Alignment details

While the report is light on most technical details, it did confirm a few rumors that I’ve been hearing about reinforcement learning from human feedback (RLHF) practices at Google, which are really great to see confirmed.

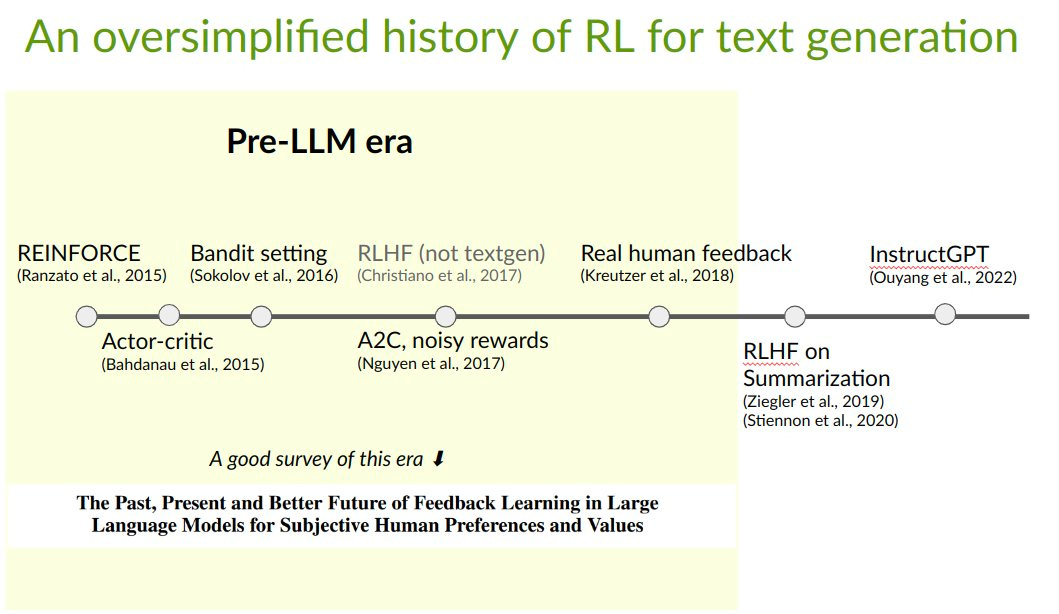

First, it is confirmed that Google has been using the REINFORCE (vanilla policy gradient) algorithm for RLHF. This is a much simpler algorithm to implement, and walking the clock back on RL practices from the control world continues.

Google uses a KL penalty for the instruction-tuning distribution in the reward function, similar to InstructGPT. Traditional RLHF pipelines include KL penalties in two other places. First, in algorithms like Proximal Policy Optimization (PPO), KL penalties are used to constrain update steps. Second, a KL penalty is often used in the reward function to make sure a policy doesn’t change too much from its initial state. This third option is constraining the policy updates to stay close to a gold set of human annotations.

Finally, Google said that they "relied on a high capacity model" during the RLHF process. I’ve heard for a long time that big reward models are better, given the ability of larger language models to manage nuance in text. I’m starting to see this in the reward model evaluation suite I’m building, but it could be another popular cost saving tool: use a large reward model to incur more small gains on a model you’ll be using repeatedly for inference.

The best part of being an alignment researcher and analyst is that companies are generally more okay with sharing details here for two reasons — 1) it’s deeply coupled with safety, so they feel a need to be transparent, and 2) the methods are evolving rapidly, so lower cost to sharing some research.

What is REINFORCE? Some history of RL

The algorithm known as REINFORCE is really just the vanilla policy gradient approach. The name comes from Williams 1992, "Simple Statistical Gradient-Following Algorithms for Connectionist Reinforcement Learning."

Policy gradient algorithms directly update the weights of the policy based on some estimate of the reward to go. Mostly, it's important to know how PPO compares to other methods and why it emerged, and why that may not matter for RLHF. REINFORCE methods were known for high variance on the policy gradients, leading to unstable learning in some complex control tasks. Basic methods like baselining and other regularization tools (off-policy gradients, actor-critic methods, etc) all emerged.

The notable paper on the path towards Proximal Policy Optimization (PPO) from REINFORCE, which lots of people use for RLHF today, was Trust Region Policy Optimization (TRPO). It turns out that PPO with some hyperparameter choices reduces to REINFORCE.

Both TRPO and PPO try to answer the same question: How do we take the right-sized step with a potentially noisy policy gradient estimate? TRPO does this with a second-order approximation of the gradient. PPO does this with a first-order approximation. It ended up being much simpler to implement, and its popularity is now obvious.

We'll see if we as a field go down the same paths of off-policy algorithms being useful for RLHF (much more data to learn from) and actor-critic algorithms (separate learning of value function and policy). The things that were important for state-based control may not be important for language because our reward functions are very different from a reward model. Google may need extremely good reward models to get REINFORCE to work, as they mentioned in the Gemma paper.

Regardless, a big way around high variance gradients is to take big batch sizes. We know Google has the compute for that, but we're not sure all of us DPO hackers do.

For more on policy gradient methods, see | these | links.

To wrap this section up, here’s a visual for the emerging history of using RL for text generation in recent years from Khanh Nguyen (click through for arxiv links):

Implementation details and RLHF

The new phase of RL we’re in for language needs entirely new implementation details and hacks. The popular papers and blog posts on implementation details for PPO are now just a starting point rather than a source of truth. RLHF is a fine-tuning method whereas the previous methods of using RL were almost all learning from scratch. The distributional distance covered is extremely different, and the representation in the base models for RLHF largely doesn’t need to change much. RLHF in language is a much gentler learning method than was needed when RL was used for control.

That is why returning to algorithms like REINFORCE and PPO makes sense. These are simple enough algorithms that people then can modify them again and create a new literature of RL algorithms for fine-tuning (such as the N implementation details of RLHF with PPO). While this may be obvious, there are still RLHF libraries in the open that are touting their implementation of the last generation of hacks. This can be a starting point, but goes to show how far behind open RLHF literature is.

Terms of use: RAIL Licenses history repeated

The Gemma model is mostly fine in its terms of service — researchers and folks building products won’t really mind complying. There are a few key terms to cover. Starting with the good one, Google doesn’t own the outputs:

Generated Output. Google claims no rights in Outputs you generate using Gemma. You and your users are solely responsible for Outputs and their subsequent uses.

On the other hand, there are strict rules on the use (No Waifu’s), and there’s a weird claim about “trying to update the model.” First, the prohibited use clause:

Prohibited use: Generate sexually explicit content, including content created for the purposes of pornography or sexual gratification (e.g. sexual chatbots). Note that this does not include content created for scientific, educational, documentary, or artistic purposes.

The update clause comes from the Responsible AI License (RAIL) developed as part of the BigScience working group.

Updates. Google may update Gemma from time to time, and you must make reasonable efforts to use the latest version of Gemma.

However, many popular models like StarCoder that nominally are released under the RAIL license now use a modified version that removes this clause because of the backlash it received from more open-source-centric developers. It’s a small point, but expect to see some discussion on it.

Some history that was not repeated is Google PR washing their release with the term open-source everywhere. They were careful in their branding this as an open model, or what I prefer, open weights release. We’re making some progress, but have a lot more work to do to clarify what is a terms-of-service vs. license when it comes to the new type of software that is machine learning artifacts.

Is Google back on top? Gemini’s woes

These top model players also have different types of exposure risk by serving their models at scale. The field is charging ahead with products that encompass areas where research has never operated. Google is joining the “ship it” mentality, and it’s good for the field. It’s expected that there will be some bumps.