State of play of AI progress (and related brakes on an intelligence explosion)

Why I don't think AI 2027 is going to come true.

Intelligence explosions are far from a new idea in the technological discourse. They’re a natural thought experiment that follows from the question: What if progress keeps going?

From Wikipedia:

The technological singularity—or simply the singularity—is a hypothetical point in time at which technological growth becomes uncontrollable and irreversible, resulting in unforeseeable consequences for human civilization. According to the most popular version of the singularity hypothesis, I. J. Good's intelligence explosion model of 1965, an upgradable intelligent agent could eventually enter a positive feedback loop of successive self-improvement cycles; more intelligent generations would appear more and more rapidly, causing a rapid increase ("explosion") in intelligence which would culminate in a powerful superintelligence, far surpassing all human intelligence.

Given the recent progress in AI, it’s understandable to revisit these ideas. If you extrapolate the local constraints that govern decisions within labs, the natural conclusion is an explosion.

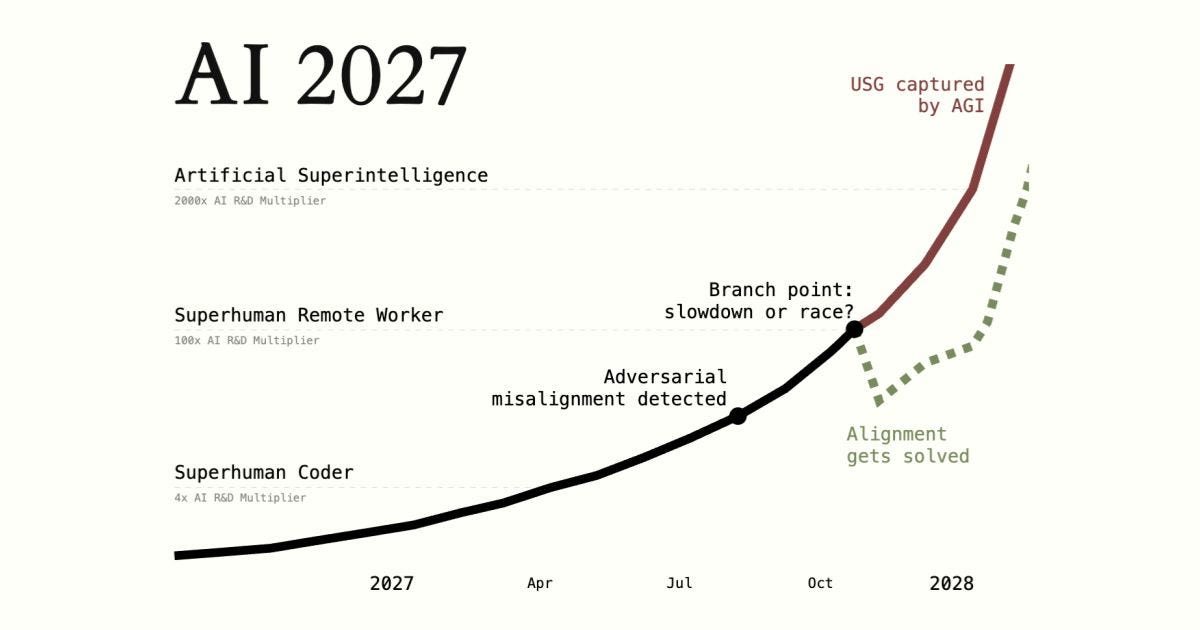

Daniel Kokotajlo et al.’s AI 2027 forecast is far from a simple forecast of what happens without constraints. It’s a well-thought-out forecasting exercise that rests on a few key assumptions about AI research accelerating as extremely strong coding agents mature into research agents with better experimental understanding. The core idea is that these stronger AI models speed up AI progress from 2x all the way to 100x over the next few years. This multiplier includes experiment time (i.e., the time to train the AIs), not just implementation time.

This is very unlikely. Still, the forecast arrived at a good time to take stock of the many ways the AI industry is evolving. What does it mean for AI as a technology to mature? How is AI research changing? What can we expect in a few years?

In summary, AI is getting more robust in areas where we know it can work, and we’re consistently finding a few new domains of value where it can work extremely well. There are no signs that language model capabilities are on an arc similar to AlphaGo, where reinforcement learning in a narrow domain created an intelligence far stronger than any human analog.

This post has the following sections:

How labs make progress on evaluations,

Current AI is broad, not narrow intelligence,

Data research is the foundation of algorithmic AI progress,

Over-optimism of RL training, and

A world with compute shifting to inference.

In many ways, this is a critique of the AGI discourse in general, inspired by AI 2027, more than a critique of their specific forecast.

In this post, there will be many technical discussions of rapid, or even accelerating, AI research progress. Much of this falls into a technocentric worldview where technical skill and capacity drive progress, but in reality, the biggest driver of progress in 2025 is likely steep industrial (or international!) competition. AI development, and the companies doing it, are still a very human problem, and competition is the most proven catalyst of performance.

See AI 2027 in its entirety, Scott Alexander’s reflections, their rebuttal to critiques that AI 2027 was ignoring China, Zvi’s roundup of discussions, or their appearance on the Dwarkesh Podcast. They definitely did much more editing and cohesion checking than I did on this response!

1. How labs make progress on evaluations

One of the hardest things to communicate in AI is why evaluation progress that looks vertical over time shouldn't be over-interpreted. If the evals are going from 0 to 1 in one year, doesn’t that indicate the AI models are getting better at everything super fast? No, this is all about how evaluations come to be scoped as “reasonable” targets in AI development over time.

None of the popular evaluations, such as MMLU, GPQA, MATH, SWE-Bench, etc., that are getting released in a paper and then solved 18 months later are truly held out by the laboratories. They’re training goals. If these evaluations were unseen tests and going vertical, you should be much more optimistic about AI progress, but they aren’t.

Consider a recent evaluation, like FrontierMath or Humanity’s Last Exam. These evaluations are introduced with leading models scoring about 0-5%. Soon after release, new models that could include data formatted for them are scoring above 20% (e.g., o3 and Gemini 2.5 Pro). These evaluations will continue to be targets for leading labs, and many researchers will work on improving performance on them directly.

Modern evaluations can become increasingly esoteric, hard for the sake of being hard. How often will a power user of ChatGPT benefit from a model that solves extremely abstract math problems? Rarely.

The story above makes more sense for something like MATH, a set of hard but not impossible math questions. In the early 2020s, MATH was extremely hard for language models, but a few clicks of scaling made accurate mathematics a reasonable task, and laboratories quickly added similar problems to their training data.

So this is how you end up with the plot from Epoch AI below — AI researchers figure out that a new evaluation is fair game for hill climbing with current techniques, and then they go all in on it.

Or consider the analogous version that can look even more shocking: the price of reaching a given score on certain evaluations falling. This comes from two factors: laboratories getting better and better at the core abilities certain evaluations test, and language model training getting far more efficient. Neither means that intelligence is rocketing. This is a normal technological process: extreme efficiency at tasks we know we can do well.

In fact, it is a common job at AI laboratories to make new data that looks very close to popular evaluations. These laboratories can’t train on the test set directly for basic reasons of scientific integrity, but they can pay thousands to millions of dollars for new training data that looks practically identical. This is a very common practice and makes the hill-climbing on evaluations far less extraordinary.
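To make the distinction concrete, here is a minimal sketch of an exact n-gram overlap check, one common style of decontamination filter. The choice of n = 8, the whitespace tokenization, and the example problems are illustrative assumptions, not any lab's actual pipeline. A verbatim copy of a test item is flagged, while a near-identical paraphrase passes the filter.

```python
def ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Return the set of lowercase, whitespace-tokenized n-grams in text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlaps_eval(train_item: str, eval_item: str, n: int = 8) -> bool:
    """Flag a training item if it shares any n-gram with an evaluation item."""
    return bool(ngrams(train_item, n) & ngrams(eval_item, n))


# Hypothetical evaluation item and two candidate training items.
eval_item = "A train leaves the station at 3 pm traveling 60 miles per hour toward a city 180 miles away"
verbatim = eval_item
paraphrase = "A bus departs the depot at 3 pm moving 60 miles per hour toward a town 180 miles away"

print(overlaps_eval(verbatim, eval_item))    # True: the exact copy is caught
print(overlaps_eval(paraphrase, eval_item))  # False: the near-identical problem slips through
```

The paraphrase shares at most six consecutive tokens with the test item, so an 8-gram filter treats it as clean training data even though it exercises the same skill.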

AI capabilities in the domains we are measuring aren't accelerating; they’re continuing. At the same time, AI’s abilities are expanding outward into new domains. AI researchers solve domains when we focus on them, not by accident. Generalization happens sometimes, but it is messy to track and argue for.

As the price of scaling kicks in, each subsequent task is getting more expensive to solve. The best benchmarks we have correlate with real, valuable tasks, but many benchmarks do not.

2. Current AI is broad, not narrow intelligence

Instead of stacking rapid evaluation progress onto one line as a cumulative, rapid improvement in intelligence, the plots above should make one think that AI is getting better at many tasks, rather than becoming superhuman at narrow ones.

In a few years, we’ll look back and see that AI is now 95% robust on a lot of things that only worked 1-5% of the time today. A bunch of new use cases will surprise us as well. We won’t see AI systems that are so intelligent that they cause seismic shifts in the nature of certain domains. Software will still be software. AI will be way better than us at completing a code task and finding a bug, but the stacks we are working on will be largely subject to the same constraints.

Epoch AI published a post that complements this view well.

There are many explanations for why this will be the case. All of them come down to the environment we operate modern AI in being too complex relative to the signal available for improvement. The AI systems that most exceeded human performance in one domain were trained in environments where that domain was the entire world. AlphaGo is the perfect example of this.

AI research, software engineering, information synthesis, and all of the techniques needed to train a good AI model are not closed systems with simple forms of verification. Some parts of training AI systems are, such as wanting the loss to go down or getting more training tokens through your model, but those aren’t really the limiting factors right now on training.

The Wikipedia page for the singularity has another explanation for this that seems prescient as we open the floodgates to try and apply AI agents to every digital task. Paul Allen thought the deceleratory effects of complexity would be too strong:

Microsoft co-founder Paul Allen argued the opposite of accelerating returns, the complexity brake: the more progress science makes towards understanding intelligence, the more difficult it becomes to make additional progress. A study of the number of patents shows that human creativity does not show accelerating returns, but in fact, as suggested by Joseph Tainter in his The Collapse of Complex Societies, a law of diminishing returns. The number of patents per thousand peaked in the period from 1850 to 1900, and has been declining since. The growth of complexity eventually becomes self-limiting, and leads to a widespread "general systems collapse".

This may be a bit of an extreme case to tell a story, but it is worth considering.
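A toy model helps show the difference between Good's feedback loop and Allen's complexity brake. The model is an illustration added here and does not come from either source. Suppose each generation of self-improvement takes r times as long as the previous one:

- If r < 1, the total time for any number of generations is bounded by t0 / (1 - r). This is a finite-time "explosion."
- If r > 1, each generation takes longer than the last, so progress slows instead.

```python
def time_to_generation(t0: float, r: float, generations: int) -> float:
    """Total time for `generations` self-improvement cycles when cycle g takes t0 * r**g."""
    return sum(t0 * r**g for g in range(generations))


# Hypothetical parameters: the first cycle takes 1 year.
explosion = time_to_generation(1.0, 0.5, 50)  # cycles halve: total approaches 1 / (1 - 0.5) = 2 years
brake = time_to_generation(1.0, 1.2, 50)      # cycles grow 20% each time: total keeps growing
print(f"{explosion:.4f} years vs {brake:,.0f} years")
```

The whole debate can be read as an argument over whether r sits below or above 1 for AI research. The model itself says nothing about which is true.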

Language models like o3 use a more complex system of tools to gain performance. GPT-4 was just a set of weights to answer every query; now ChatGPT also needs search, code execution, and memory. The more layers there are, the smaller the magnitude of changes we’ll see.

This, of course, needs to be controlled for with inference costs as a constant. We still have many problems in AI that will be “solved” simply by us using 1,000X the inference compute on them.
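Here is a back-of-the-envelope way to see how 1,000X inference compute can "solve" a problem. Suppose each independent attempt succeeds with probability p, and a reliable verifier can pick out a correct attempt. Then the chance that at least one of n attempts succeeds is 1 - (1 - p)^n. Independence and a perfect verifier are strong assumptions that many real tasks violate, so treat this sketch as an optimistic bound.

```python
def success_with_n_attempts(p: float, n: int) -> float:
    """Probability that at least one of n independent attempts succeeds."""
    return 1.0 - (1.0 - p) ** n


# Hypothetical per-attempt success rate of 1%.
for n in (1, 10, 100, 1000):
    print(n, round(success_with_n_attempts(0.01, n), 4))
```

With a 1% per-attempt success rate, a thousand attempts push the estimate close to certainty. That is the sense in which extra compute, rather than new capability, "solves" the task.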

3. Data research is the foundation of algorithmic AI progress

One of the main points of the AI 2027 forecast is that AI research is going to get 2X, then 4X, then 100X, and finally 1,000X as productive as it is today. These multipliers are based on end-to-end time for integrating new ideas into models, and that framing misreads what machine learning research is actually bottlenecked on. Scaling is getting more expensive, and we don’t know what paradigm will come after reasoning and inference-time compute scaling.
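For a sense of scale: an R&D multiplier m means one year of today's research progress would take 1/m of a year. The conversion below is plain arithmetic on the multipliers quoted above, assuming each multiplier stays constant.

```python
def days_per_year_of_progress(multiplier: float) -> float:
    """Calendar days needed for one year of current-pace research progress."""
    return 365 / multiplier


for m in (2, 4, 100, 1000):
    print(f"{m:>5}x: {days_per_year_of_progress(m):7.2f} days")
```

At 100x, a year of today's progress would take about 3.65 days. At 1,000X, it would take under nine hours, experiments included.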

For machine learning research to accelerate at these rates, it needs to be entirely bottlenecked by compute efficiency and implementation difficulty: problems like getting the maximum theoretical FLOPs out of Nvidia GPUs and making the loss go as low as possible. People are working on these problems today, and they have been an important source of marginal gains in AI progress in recent years.
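One way to formalize this point is Amdahl's law. This is a standard framing borrowed here, not one AI 2027 uses. If only a fraction f of research time goes to work that AI speeds up by a factor s, the overall speedup is 1 / ((1 - f) + f / s). The fractions below are hypothetical.

```python
def overall_speedup(f: float, s: float) -> float:
    """Amdahl's law: total speedup when a fraction f of the work is accelerated by s."""
    return 1.0 / ((1.0 - f) + f / s)


# Hypothetical shares of research time spent on compute efficiency and implementation.
for f in (0.5, 0.9, 0.99):
    print(f"f={f}: {overall_speedup(f, s=1000):.1f}x overall with a 1000x speedup on that share")
```

Even an unbounded speedup on the accelerated share caps the total at 1 / (1 - f). A 100x overall multiplier therefore requires that at least 99% of the work be accelerable.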

ML research is far messier. It relies much more on poking around the data, building intuitions, and launching yolo runs based on lingering feelings. AI models in the near future could easily launch yolo runs if we give them the compute, but they would not be launching them for the same reasons. AI systems are heading toward rapid cycles of trial and error that optimize very narrow signals. These narrow signals, like loss or evaluation scores, closely mirror the RL scores that current models are trained on.

These types of improvements are crucial for making the model a bit better, but they are not the type of idea that gets someone to try to train GPT-3 in the first place or scale up RL to get something like o1.

A very popular question in the AI discourse today is “Why doesn’t AI make any discoveries despite having all of human knowledge?” (more here). Quoting Dwarkesh Patel’s interview with Dario Amodei:

One question I had for you while we were talking about the intelligence stuff was, as a scientist yourself, what do you make of the fact that these things have basically the entire corpus of human knowledge memorized and they haven't been able to make a single new connection that has led to a discovery?

The same applies to AI research. Models getting better and better at solving coding problems does not seem like the type of training that would enable this. We’re making our models better at the tasks that we know. This process is just as likely to narrow the total capabilities of the models as it is to magically instill impressive capabilities like scientific perspective.

As we discussed earlier in this piece, emergence isn’t magic, it’s a numerical phenomenon of evaluations being solved very quickly. AI research will get easier and go faster, but we aren’t heading for a doom loop.

The increased computing power AI researchers are getting their hands on is, for the time being, maintaining the pace of progress. As compute gets more expensive, maybe superhuman coding capabilities will continue to enable another few years of rapid progress, but eventually, saturation will come. Current progress is too correlated with increased compute to believe that this will be a self-fulfilling feedback loop.

There’s a saying in machine learning research that the same few ideas are repeated over and over again. Here’s an extended version that leans in and says there are no new ideas in machine learning, just new datasets:

The data problem is not something AI is going to have an easy time with.

Post-training is one example. We’ve been using the same loss functions forever, and we are hill-climbing rapidly through clever distillation from bigger, stronger models. The industry standard is that post-training is messy and involves incrementally training (and maybe merging) many checkpoints to slowly interweave new capabilities into the model. It’s easy to get that wrong, as we saw with the recent GPT-4o sycophancy crisis, and lose the narrow band of good vibes for a model. I doubt AI supervision can monitor vibes like this.
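Merging can be as simple as averaging the parameters of several checkpoints that share an architecture. The sketch below shows linear (weighted) weight averaging on toy parameter dictionaries. The checkpoint names and values are hypothetical. Real merging recipes, including whatever any given lab uses, may be considerably more involved.

```python
def merge_checkpoints(checkpoints: list[dict[str, list[float]]],
                      weights: list[float]) -> dict[str, list[float]]:
    """Linearly combine same-shaped checkpoints: merged = sum_i w_i * theta_i."""
    if len(checkpoints) != len(weights):
        raise ValueError("need one weight per checkpoint")
    total = sum(weights)
    norm = [w / total for w in weights]  # normalize so the weights sum to 1
    merged = {}
    for name in checkpoints[0]:
        values = [ckpt[name] for ckpt in checkpoints]
        merged[name] = [sum(w * v[i] for w, v in zip(norm, values))
                        for i in range(len(values[0]))]
    return merged


# Hypothetical checkpoints, e.g. one tuned for math and one for instruction following.
math_ckpt = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
chat_ckpt = {"layer.weight": [3.0, 0.0], "layer.bias": [1.0]}
merged = merge_checkpoints([math_ckpt, chat_ckpt], weights=[0.5, 0.5])
print(merged)
```

The weights are the knob that trades one checkpoint's capabilities against another's. Small shifts in them can move a model in or out of the "narrow band of good vibes" described above, which is part of why this work stays messy.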

For example, in Tülu 3 we found that a small dataset of synthetic instruction-following data had a second-order effect that improved overall performance on things like math and reasoning as well. This is not a hill that can be climbed, but rather a lucky find.

AI research is still very messy and does not look like LeetCode problems or simple hill-climbing optimization. The key is always the data, and language models are not much better than humans at judging between different responses.

4. Over-optimism of RL training

A lot of people are excited about scaling RL training up further right now, which will inevitably involve extending it to more domains. One of the most repeated ideas is to use RL training to continually fine-tune the model in real-world scenarios, including everything from web tasks to robotics and scientific experiments. There are two separate problems here:

Continually training language models in production to add new capabilities “in flight,” which is not yet a solved problem, and

Training models to take actions in many domains.

The first problem is something that I’m confident we’ll solve. It’s likely technically feasible now that RL is the final stage of post-training and is becoming far more stable. The challenge with it is more of a release and control problem, where a model being trained in-flight doesn’t have time for the usual safety training. This is something the industry can easily adapt to, and we will as traditional pretraining scaling saturates completely.

The second problem puts us right back into the territory of why projects scaling robotics or RL agents to multiple domains are hard. Even breakthrough works from DeepMind, such as Gato (multi-domain RL control) and RT-X (multi-robot control policies), have major caveats alongside their obvious successes.

Building AI models that control multiple real-world systems is incredibly hard for many reasons, some of which involve:

Different action spaces across domains mandate either modifying the domain to suit the underlying policy, which in this case is converting all control tasks to language, or modifying the model to be able to output more types of tokens.

The real world is subject to constant drift. Continual fine-tuning will need to do as much work just to maintain performance on systems that degrade as it does to learn to use them in the first place.

Scaling RL to new types of domains like this is going to look much more like recent progress in robotics research than like the takeoff pace of reasoning language models. Robotics progress is a slow grind, and it feels so different that it is hard to describe concisely. Robotics faces far more problems from the nature of the environment, not just from the learning.

The current phase of RL training is suited to making models capable of inference-time scaling on domains they saw during pretraining. Using these new RL stacks to learn entirely new, out-of-domain problems is a new research area.

If this becomes the next paradigm beyond inference-time scaling, I will be shocked, but obviously excited. We don’t have evidence to suggest that it will. The RL training we’re going to get will continue to hill-climb on search and code execution, giving us Deep Research plus plus, not an omnipotent action-taking model.

A world with compute shifting to inference

While the AI research world is dynamic, engaging, and rapidly moving forward, some signs that the above is correct may already be emerging. A basic sign of this future coming true will be the share of compute spent on research decreasing relative to inference amid the rapid buildout. If extremely rapid AI progress were available to organizations that put in marginally more compute, serving inference would be a far lower priority. If investing in research created a positive feedback loop on business revenue, every lab would need to do it.

For example, consider our discussion of Meta’s compute allocation during Dylan’s and my appearance on the Lex Fridman Podcast:

(01:03:56) And forever, training will always be a portion of the total compute. We mentioned Meta’s 400,000 GPUs. Only 16,000 made Llama 3.
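Taking the quote's figures at face value, training Llama 3 used only a small slice of Meta's fleet:

```python
total_gpus = 400_000   # Meta GPU count cited in the quote
llama3_gpus = 16_000   # GPUs that trained Llama 3, per the same quote
training_share = llama3_gpus / total_gpus
print(f"{training_share:.0%} of the fleet trained Llama 3")
```

That is 4%. The quote does not break down how the remaining GPUs were used.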

OpenAI is already making allocation trade-offs on their products, regularly complaining about GPUs melting. Part of the reason they, or anyone, could release an open-weights model is to reduce their inference demand. Make the user(s) pay for the compute.

Part of the U.S.’s economic strength is a strong services sector. AI is enabling that, and the more it succeeds there, the more companies will need to continue to enable it with compute.

With the changing world economic order, cases like Microsoft freezing datacenter buildouts are worth watching as correlated indicators. Microsoft’s buildout depends on many factors, only one of which is potential training progress, so it’s far from a sure signal.

In reality, with the large sums of capital at play, it is unlikely that labs will give free rein over billions of dollars of compute to so-called “AI researchers in the datacenter,” given how constrained compute is at all of the top labs. Most of that compute goes to hill-climbing on fairly well-known gains for the next model! AI research with AI aid will be a hand-in-hand process, not an autonomous takeoff, at least over the next few years.

AI will make a ton of progress, but it will not be an obvious acceleration. With traditional pretraining saturating, one could even argue that after the initial gains of inference-time compute, research is actually decelerating, but it will take years to know for sure.

Comments on this post are open to everyone; please discuss!

Thanks to Steve Newman and Florian Brand for some early feedback on this post and many others in the Interconnects Discord for discussions that helped formulate it.


As someone who thinks a rapid (software-only) intelligence explosion is likely, I thought I would respond to this post and try to make the case in favor. I tend to think that AI 2027 is a quite aggressive but plausible scenario.

---

I interpret the core argument in AI 2027 as:

- We're on track to build AIs which can fully automate research engineering in a few years. (Or at least, this is plausible, like >20%.) AI 2027 calls this level of AI "superhuman coder". (Argument: https://ai-2027.com/research/timelines-forecast)

- Superhuman coders will ~5x accelerate AI R&D because ultra fast and cheap superhuman research engineers would be very helpful. (Argument: https://ai-2027.com/research/takeoff-forecast#sc-would-5x-ai-randd).

- Once you have superhuman coders, unassisted humans would only take a moderate number of years to make AIs which can automate all of AI research ("superhuman AI researcher"), like maybe 5 years. (Argument: https://ai-2027.com/research/takeoff-forecast#human-only-timeline-from-sc-to-sar). And, because of AI R&D acceleration along the way, this will actually happen much faster.

- Superhuman AI researchers can obsolete and outcompete humans at all AI research. This includes messy data research, discovering new paradigms, and whatever humans might be doing which is important. These AIs will also be substantially faster and cheaper than humans, like fast and cheap enough that with 1/5 of our compute we can run 200k parallel copies each at 40x speed (for ~8 million parallel worker equivalents). Because labor is a key input into AI R&D, these AIs will be able to speed things up by ~25x. (Argument: https://ai-2027.com/research/takeoff-forecast#sar-would-25x-ai-randd)

- These speed-ups don't stop just above the human range; they continue substantially beyond it, quickly yielding AIs which are much more capable than humans. (AI 2027 doesn't really argue for this, but you can see here: https://ai-2027.com/research/takeoff-forecast#siar-would-250x-ai-randd)
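The worker-equivalent figure in the fourth point is straightforward multiplication. Spelling it out also shows what the same assumptions would imply for the full compute stock. That second number is a hypothetical linear extrapolation, not a figure from AI 2027.

```python
copies = 200_000      # parallel copies on 1/5 of compute (AI 2027's figure)
speed_multiple = 40   # each copy runs at 40x human speed (AI 2027's figure)

worker_equivalents = copies * speed_multiple
print(f"{worker_equivalents:,} human-speed worker equivalents")  # 8,000,000

# Hypothetical: if throughput scaled linearly with compute, all compute would give 5x more.
print(f"{worker_equivalents * 5:,} with all compute (linear-scaling assumption)")
```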

(There are a few other minor components like interpolating the AI R&D multipliers between these milestones and the argument for being able to get these systems to run at effective speeds which are much higher than humans. It's worth noting that these numbers are more aggressive than my actual median view, especially on the timeline to superhuman coder.)
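This sketch illustrates why "maybe 5 years" of unassisted work can compress into much less calendar time. It interpolates the R&D multiplier between the superhuman-coder value (5x) and the superhuman-AI-researcher value (25x). The interpolation is geometric in the fraction of progress completed. That is a simplifying assumption made here, not necessarily how AI 2027 interpolates.

```python
import math


def calendar_years(human_years: float, m_start: float, m_end: float, steps: int = 10_000) -> float:
    """Calendar time to complete `human_years` of unassisted-human progress when the
    R&D multiplier rises geometrically from m_start to m_end as progress accumulates."""
    total = 0.0
    for i in range(steps):
        p = (i + 0.5) / steps                      # midpoint of this slice of progress
        multiplier = m_start * (m_end / m_start) ** p
        total += (human_years / steps) / multiplier
    return total


years = calendar_years(human_years=5.0, m_start=5.0, m_end=25.0)
# Exact integral of the same schedule: H * (1/a - 1/b) / ln(b/a).
closed_form = 5.0 * (1 / 5.0 - 1 / 25.0) / math.log(25.0 / 5.0)
print(f"{years:.3f} calendar years (closed form {closed_form:.3f})")
```

Under these assumptions, the five human-years take roughly half a calendar year. Different interpolation schemes or endpoint multipliers move this number substantially.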

---

Ok, now what is your counterargument?

Sections (1) and (2) don't seem in conflict with the AI 2027 view or the possibility of a software-only intelligence explosion. (I'm not claiming you intended these to be in conflict, just clarifying why I'm not responding. I assume you have these sections as relevant context for later arguments.)

As far as I can tell, section (3) basically says "You won't get large acceleration just via automating implementation and making things more compute efficient. Also, ML research is messy and thus it will be hard to get AI systems which can actually fully automate this."

AI 2027 basically agrees with these literal words:

- The acceleration from superhuman coders which fully automate research engineering (and run at high speeds) is "only" 5x. To get the higher 25x (or beyond) acceleration, the AIs need to be as good as the best ML researchers and fully automate ML research.

- AI 2027 thinks it would take ~5 years of unaccelerated human AI R&D progress to get from superhuman coder to superhuman AI researcher, so the forecast incorporates this being pretty hard.

There is presumably a quantitative disagreement: you probably think that the acceleration from superhuman coders is lower and the unassisted research time to go from superhuman coders to superhuman AI researchers is longer (much more than 5 years?).

(FWIW, I think AI 2027 is probably too bullish on the superhuman coder AI R&D multiplier, I expect more like 3x.)

I'll break this down a bit further.

Importantly, I think the claim:

> For machine learning research to accelerate at these rates, it needs to be entirely bottlenecked by compute efficiency and implementation difficulty.

Is probably missing the point: AI 2027 is claiming that we'll get these large multipliers by being able to make AIs which beat humans at the overall task of AI research! So, it just needs to be the case that machine learning research could be greatly accelerated by much faster (and eventually very superhuman) labor. I think the extent of this acceleration and the returns are an open question, but I don't think whether it is entirely bottlenecked by compute efficiency and implementation difficulty is the crux. (The extent to which compute efficiency and implementation difficulty bottleneck AI R&D is the most important factor for the superhuman coder acceleration, but not the acceleration beyond this.)

As far as the difficulty of making a superhuman AI researcher, I think your implicit vibes are something like "we can make AIs better and better at coding (or other easily checkable tasks), but this doesn't transfer to good research taste and intuitions". I think the exact quantitative difficulty of humans making AIs which can automate ML research is certainly an open question (and it would be great to get an overall better understanding), but I think there are good reasons to expect the time frame for unassisted humans (given a fixed compute stock) to be more like 3-10 years than like 30 years:

- ML went from AlexNet to GPT-4 in 10 years! Fast progress has happened historically. To be clear, this was substantially driven by compute scaling (for both training runs and experiments), but nonetheless, research progress can be fast. The gap from superhuman coder to superhuman AI researcher intuitively feels much smaller than the gap from AlexNet to GPT-4.

- For success on harder-to-check tasks, there are hopes for both extending our ability to check and generalization. See [this blog post for more discussion](https://helentoner.substack.com/p/2-big-questions-for-ai-progress-in). Concretely, I think we can measure whether AIs produce some research insight that ended up improving performance, so we can RL on this, at least at small scale (which might transfer). We can also train on things like forecasting the results of training runs. Beyond this, we might just be able to get AIs to generalize well: many current AI capabilities are due to reasonably far generalization, and I expect this to continue.

---

As far as section (4), I think the core argument is "solving real-world domains (e.g., things which aren't software only) will be hard". You might also be arguing that RL will struggle to teach AIs to be good ML researchers (it's a bit hard for me to tell how applicable your argument is to this).

I think the biggest response to the concern with real world domains is "the superhuman AI researchers can figure this out even if it would have taken humans a while". As far as RL struggling to teach AIs to be good scientists (really ML researchers in particular), I think this is addressed above, though I agree this is a big unknown. Note that we can (and will) explore approaches that differ some from the current RL paradigm and acceleration from superhuman coders might make this happen a bunch faster.

---

> In reality, with the large sums of capital at play, it is unlikely that labs give free rein to billions of dollars of compute to so called "AI researchers in the datacenter" because of how constrained compute is at all of the top labs.

I find this argument somewhat wild if you assume that the AI researchers in the datacenter are making extremely fast progress. Insofar as the software-only singularity works, the AI researchers in the datacenter can rapidly improve efficiency through algorithmic progress, saving far more compute than you spent to run them. Currently, inference costs for a given level of performance drop by around 9-40x per year depending on the exact task. (See the graph you included.) So, if AIs can make this progress happen much faster, it will easily pay for itself on inference costs alone. That said, I don't expect this is the largest source of returns...
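Here is a rough version of that arithmetic. Suppose inference cost for a fixed level of performance falls by a factor r per year, and faster AI-driven research compresses that progress by a factor k. Then the same cost reduction arrives in 1/k of the time. The 9-40x per year range is quoted above. Two things are hypothetical here: the k values, and the assumption that the entire cost decline speeds up by k. In practice, part of the decline comes from hardware.

```python
import math


def months_for_reduction(target_factor: float, annual_factor: float, speedup: float) -> float:
    """Months until inference cost for fixed performance falls by target_factor,
    given an annual cost-reduction factor and a research speedup that compresses it."""
    years_at_base_rate = math.log(target_factor) / math.log(annual_factor)
    return 12 * years_at_base_rate / speedup


for annual in (9, 40):
    for k in (1, 5, 25):
        print(f"{annual}x/yr, {k}x research speed: 10x cheaper in "
              f"{months_for_reduction(10, annual, k):.1f} months")
```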

There will be diminishing returns, but if the software-only singularity ends up being viable, then these diminishing returns will be outpaced by ever more effective and smarter AI researchers in the datacenter. Progress then wouldn't slow until quite high limits are reached.

I assume your actual crux is about the returns to AI R&D at the point of full (or substantial) automation by AIs. You think the returns are low enough that companies will (rationally) invest elsewhere.

I agree that AI companies not investing all their compute into AI R&D is some update about the returns to algorithmic progress. But the regime might differ substantially after full automation by AI researchers!

(And, I think the world would probably be better if AI companies all avoid doing a rapid intelligence explosion internally!)

Minimally, insofar as AI companies are racing as fast as possible, we'd expect that whatever route the companies take is the one they think is fastest! If there is a faster route than AI accelerating AI R&D, fair enough, companies would take that instead. But if the AI-automating-AI-R&D story is insanely fast, then that other route must be even faster.

The world is way too complicated for any one paradigm to simply take it by storm. We've seen that in self-driving cars, warehouse automation, cancer research, you name it.

It will take a lot of time to make the solutions robust, general, cheap, and easy to use.